Neural networks breakthrough sees computer start to think like a human for the first time

Finnish computer scientists have found a way to train neural networks to think more like the human brain.

Computer scientists in Finland have made a breakthrough in artificial intelligence research by using a neurobiological approach to get a deep learning neural network to detect objects in an image all by itself with an accuracy rate of 75%.

The human brain is a wondrous thing. It is so incredibly clever that, after decades of research, we are still nowhere close to replicating the lightning-fast computational speed of the mind.

At the moment, the best that computer scientists can come up with are neural networks: software models made up of large numbers of interconnected artificial "neurons", arranged in layers and trained with computer algorithms to solve complex problems in a way loosely inspired by the human central nervous system. Different layers examine different aspects of the problem, and their outputs combine to produce an answer.
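As a rough illustration of this layered structure, the sketch below passes a made-up 8×8 "image" through the two layers of a tiny network written with NumPy. The layer sizes, the random (untrained) weights and the three output classes are all assumptions for illustration; real image-recognition networks have many more layers and learn their weights from data.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    """Rectified linear unit: keeps positive values, sets negatives to zero."""
    return np.maximum(x, 0.0)

def softmax(z: np.ndarray) -> np.ndarray:
    """Turn raw scores into probabilities that sum to 1."""
    z = z - z.max()  # subtract the maximum for numerical stability
    e = np.exp(z)
    return e / e.sum()

rng = np.random.default_rng(0)

# Hypothetical sizes: a flattened 8x8 image, 16 hidden units, 3 possible answers.
n_inputs, n_hidden, n_classes = 64, 16, 3
W1 = rng.normal(scale=0.1, size=(n_hidden, n_inputs))   # first-layer weights (untrained)
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.1, size=(n_classes, n_hidden))  # second-layer weights (untrained)
b2 = np.zeros(n_classes)

def forward(x: np.ndarray) -> np.ndarray:
    """Pass one input through both layers and return class probabilities."""
    hidden = relu(W1 @ x + b1)        # each hidden unit responds to its own pattern in the input
    return softmax(W2 @ hidden + b2)  # the output layer combines those responses into an answer

image = rng.random(n_inputs)  # random pixel values standing in for a real picture
probs = forward(image)
print(probs.round(3), probs.sum())
```

Training would mean adjusting `W1`, `b1`, `W2` and `b2` until the highest probability lands on the correct answer, which is where large numbers of labelled examples come in.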

The problem is that neural networks need a huge amount of input and training from humans before they can learn to solve a problem. An image-recognition network, for example, might be trained on ImageNet, a huge visual database of more than a million images, each of which has been labelled by a human.

This is known as "supervised learning". True artificial intelligence will not be possible until neural networks can learn autonomously, without labels, through "unsupervised learning", and that is what Finnish start-up Curious AI is trying to achieve.
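The difference between the two kinds of learning can be sketched on toy data. In the hypothetical example below, the supervised approach uses human-provided labels to work out where each group of points sits, while the unsupervised approach, here the classic k-means clustering algorithm (a stand-in, not Curious AI's method), has to discover the same groups from the points alone.

```python
import numpy as np

rng = np.random.default_rng(1)

# Toy data: two well-separated clouds of 2-D points, standing in for two kinds of object.
points = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2)),
    rng.normal(loc=[4.0, 4.0], scale=0.5, size=(50, 2)),
])
labels = np.array([0] * 50 + [1] * 50)  # human-provided annotations

# Supervised: the labels say which points belong together,
# so each class centre is simply the mean of its labelled points.
supervised_centres = np.array([points[labels == c].mean(axis=0) for c in (0, 1)])

def kmeans(x: np.ndarray, k: int, n_iter: int = 20, seed: int = 0) -> np.ndarray:
    """Unsupervised: find k group centres without ever looking at the labels."""
    r = np.random.default_rng(seed)
    centres = x[r.choice(len(x), size=k, replace=False)]  # start from k random data points
    for _ in range(n_iter):
        # Assign every point to its nearest centre...
        dists = np.linalg.norm(x[:, None, :] - centres[None, :, :], axis=2)
        assign = dists.argmin(axis=1)
        # ...then move each centre to the mean of the points assigned to it.
        centres = np.array([
            x[assign == j].mean(axis=0) if np.any(assign == j) else centres[j]
            for j in range(k)
        ])
    return centres

unsupervised_centres = kmeans(points, k=2)
# Sort by x-coordinate so the two sets of centres line up for comparison.
unsupervised_centres = unsupervised_centres[np.argsort(unsupervised_centres[:, 0])]
print(supervised_centres.round(2))
print(unsupervised_centres.round(2))
```

On cleanly separated data like this, k-means finds the same centres the labels give; on real images, where objects overlap, vary in appearance and share colours with their surroundings, discovering such groups without labels is far harder.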

"The human brain does a lot of unsupervised learning. We don't have to tell a baby all the time that this is a spoon. They learn from the context automatically and they form the concept that this is interesting because someone is holding it in their hand, and they later associate a label for it to go with the concept," Curious AI's CTO Antti Rasmus told IBTimes UK at the Slush 2016 tech conference in Helsinki.

"Forming concepts from objects is easy for humans so we don't even think about it. It's been studied in psychology – known as the Gestalt Principles, where the human brain groups things that have a similar shape, colour, movement, patterns and so on. We're taking the first step to taking the deep learning systems to group objects in the same way the human brain does."

Applying neuroscience to artificial neural networks

In neuroscience, a concept called "rate coding" holds that a neuron carries information in how often it fires: the higher its firing rate, the stronger the signal it is conveying. Neurons are constantly firing, but in the 1980s, scientists realised that neurons were also grouping themselves together to represent different pieces of information.
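Rate coding can be simulated in a few lines. The sketch below assumes a common textbook simplification in which a neuron fires at random moments (a Poisson process) with an average rate set by the stimulus; the rates chosen for "weak", "medium" and "strong" stimuli are made up. Simply counting spikes per second is enough to recover which stimulus was stronger.

```python
import numpy as np

rng = np.random.default_rng(42)

def poisson_spike_train(rate_hz: float, duration_s: float, dt: float = 0.001) -> np.ndarray:
    """Simulate a neuron firing at random with an average rate of `rate_hz`.
    Returns a 0/1 array with one entry per time step of `dt` seconds."""
    n_steps = int(round(duration_s / dt))
    return (rng.random(n_steps) < rate_hz * dt).astype(int)

def estimate_rate(spikes: np.ndarray, duration_s: float) -> float:
    """Decode the signal by counting spikes per second."""
    return spikes.sum() / duration_s

# Hypothetical mapping: a stronger stimulus drives a higher firing rate.
for intensity, rate in [("weak", 5.0), ("medium", 20.0), ("strong", 80.0)]:
    spikes = poisson_spike_train(rate, duration_s=10.0)
    print(f"{intensity:>6}: true {rate:5.1f} Hz, estimated {estimate_rate(spikes, 10.0):5.1f} Hz")
```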

This concept is known as "temporal coding": the exact timing of when neurons fire matters, and that timing defines which neurons belong together among tens of thousands of others. So while some neurons firing in step help the brain pick out a specific object in a pile of objects, such as a red scarf surrounded by office stationery, other neurons signal to the brain that all the other objects are merely part of the background.
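Temporal coding groups neurons by when they fire rather than how often. The toy sketch below uses invented spike times: neurons 0 to 2 (the "scarf") fire together early in each 25 ms cycle, neurons 3 to 5 (the "background") fire together later in each cycle, and a simple coincidence test with a hypothetical 2 ms window sorts them into groups. It illustrates the principle only; it is not a model of real brain tissue or of Curious AI's algorithm.

```python
import numpy as np

rng = np.random.default_rng(7)

# Invented spike times (ms) for six neurons over four 25 ms cycles, with small jitter.
cycle_starts = np.arange(0, 100, 25)   # [0, 25, 50, 75]
scarf_times = cycle_starts + 5         # "scarf" neurons fire 5 ms into each cycle
background_times = cycle_starts + 15   # "background" neurons fire 15 ms into each cycle
spikes = [scarf_times + rng.normal(0, 0.3, size=4) for _ in range(3)] + \
         [background_times + rng.normal(0, 0.3, size=4) for _ in range(3)]

def coincidence(a: np.ndarray, b: np.ndarray, window_ms: float = 2.0) -> float:
    """Fraction of spikes in `a` that have a partner spike in `b` within `window_ms`."""
    return float(np.mean([np.min(np.abs(b - t)) <= window_ms for t in a]))

def group_by_synchrony(trains: list, threshold: float = 0.75,
                       window_ms: float = 2.0) -> list[list[int]]:
    """Greedy grouping: each neuron joins the first group whose founder it fires in step with."""
    groups: list[list[int]] = []
    for i, train in enumerate(trains):
        for group in groups:
            if coincidence(train, trains[group[0]], window_ms) >= threshold:
                group.append(i)
                break
        else:  # no existing group matched, so this neuron starts a new one
            groups.append([i])
    return groups

print(group_by_synchrony(spikes))
```

Every neuron here fires exactly four times, so rate coding alone could not tell the two groups apart; only the timing separates them.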

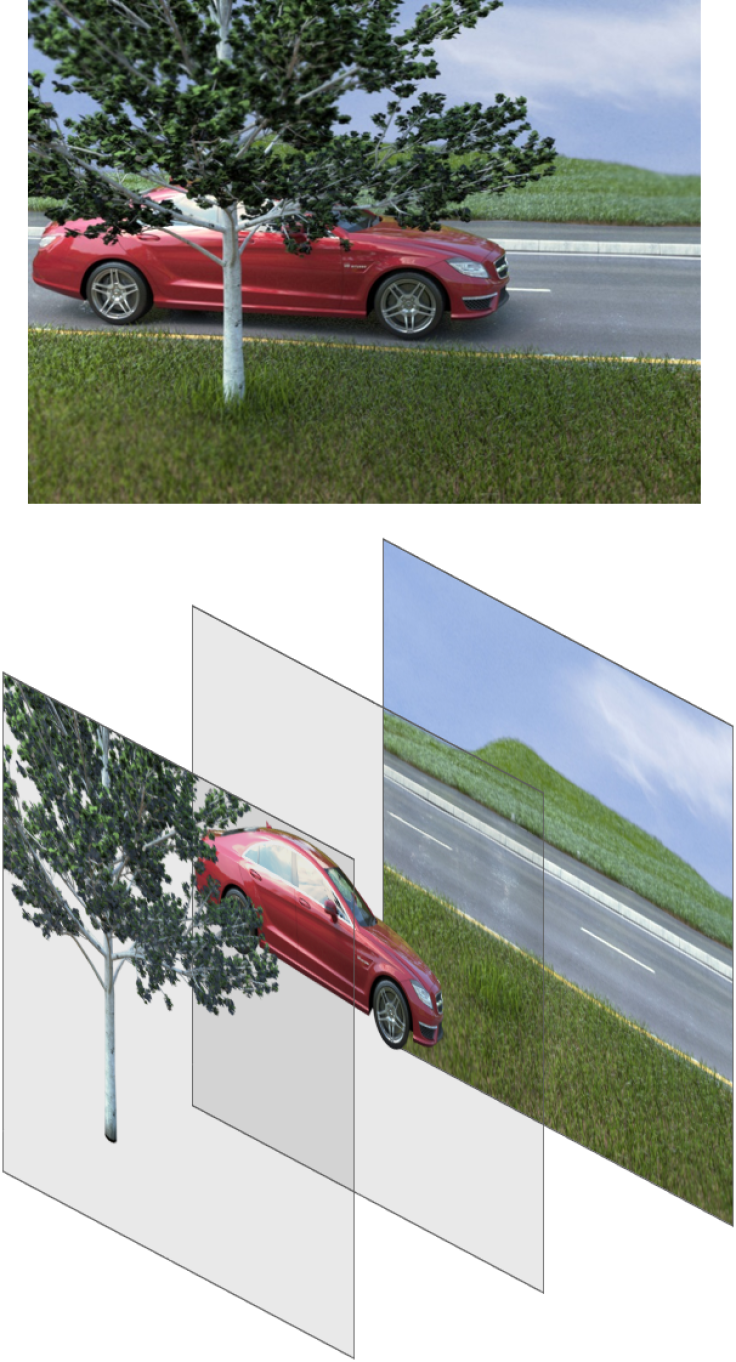

"Our computer algorithm implements temporal coding. We have many copies of each layer in a neural network. The whole network has been duplicated four times, meaning that the system learns that each of these copies represent one object and they combine them together so it matches with the original image," explained Rasmus, who previously worked in software engineering at Nvidia and is currently doing a PhD in deep learning at Aalto University.

"The network codes its own image by separating it into four different groups. It's unsupervised learning – we don't have any labels for the system, and when we show it the images, it learns to break down the image into its components (i.e. the objects in the image)."

Once the neural network is able to break the image down into separate components, it becomes much easier to classify and identify the objects, because each one can be examined on its own instead of overlapping and obscuring the others.
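The grouping Rasmus describes can be sketched as a mixture of K copies. In this simplified illustration (not the Tagger implementation, which refines its groups iteratively with a trained network), each of four hypothetical copies proposes an image plus a "mask" saying how much of each pixel it claims. A softmax makes the masks sum to 1 at every pixel, the masked images are added back together and compared with the original, and the largest mask at each pixel gives the segmentation. All arrays are random stand-ins for what a trained network would produce.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Normalise along `axis` so the values there are positive and sum to 1."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

K, H, W = 4, 8, 8  # four copies ("groups"), each looking at an 8x8 image
rng = np.random.default_rng(0)

image = rng.random((H, W))                # stand-in for the original image
group_images = rng.random((K, H, W))      # what each copy thinks its object looks like (hypothetical)
mask_logits = rng.normal(size=(K, H, W))  # how strongly each copy claims each pixel (hypothetical)

# Share every pixel out between the K groups; the shares sum to 1 at each pixel.
masks = softmax(mask_logits, axis=0)

# Recombine the groups into one picture and measure how well it matches the original.
reconstruction = (masks * group_images).sum(axis=0)
error = np.mean((reconstruction - image) ** 2)

# Read off the segmentation: each pixel belongs to the group with the largest mask value.
segmentation = masks.argmax(axis=0)
print(f"reconstruction error: {error:.3f}")
print(segmentation)
```

Training would adjust the copies so that `error` shrinks; the hope is that the masks then carve the image into its separate objects, so that each object can be classified from its own group.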

Perceptual grouping could transform deep learning

The researchers first let the neural network study images and group objects completely unsupervised, then added annotated labels (supervised learning) to see what the system had learned. They found that Curious AI's Tagger system achieved an accuracy rate of 75.1%.
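One simple way to see what an unsupervised system has learned, sketched below, is to let it group the data first and only then bring in labels: each group is named after the label most common among its members, and accuracy is the fraction of examples whose group name matches their true label. The cluster ids and labels are invented, and majority-vote naming is an assumption for illustration, not necessarily the evaluation used in the Tagger paper.

```python
from collections import Counter

# Hypothetical output of an unsupervised grouping step: one cluster id per example.
cluster_ids = [0, 0, 1, 1, 2, 2, 0, 1, 2, 2]
# Human labels, brought in only afterwards to check what the groups mean.
true_labels = ["cat", "cat", "dog", "dog", "car", "car", "cat", "car", "car", "dog"]

def name_clusters(clusters: list[int], labels: list[str]) -> dict[int, str]:
    """Name each cluster after the label that occurs most often among its members."""
    votes: dict[int, Counter] = {}
    for c, y in zip(clusters, labels):
        votes.setdefault(c, Counter())[y] += 1
    return {c: counter.most_common(1)[0][0] for c, counter in votes.items()}

def accuracy(clusters: list[int], labels: list[str], names: dict[int, str]) -> float:
    """Fraction of examples whose cluster name matches their true label."""
    hits = sum(names[c] == y for c, y in zip(clusters, labels))
    return hits / len(labels)

names = name_clusters(cluster_ids, true_labels)
print(names, accuracy(cluster_ids, true_labels, names))
```

Because the labels are used only after the grouping is fixed, the score reflects how well the unlabelled grouping already lined up with human categories.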

In comparison, the conventional neural network achieved an accuracy rate of only 21%, barely one percentage point better than guessing the answer at random.

"This is revolutionary research because it takes unsupervised learning one step closer to being solved. We get much more humanlike unsupervised learning by giving the machine the concept of an object. Further research can then use this to help neural networks do higher level reasoning about the object in relation to its surroundings," said Rasmus.

"In existing systems, computers operate in a statistical-based world-view. But to bring them to achieve something in the world we live in, it is crucial to get them to understand the world in the way we do. People typically fail to grasp how bad computer vision systems are as human vision is so natural to us."

The open-access paper, entitled "Tagger: Deep Unsupervised Perceptual Grouping", will be presented on Wednesday 7 December at the Neural Information Processing Systems (NIPS) 2016 machine learning conference in Barcelona, Spain.

Curious AI is looking for industrial partners that would like to pilot its deep learning technology in real-world AI systems and is currently in discussion with car manufacturers who are keen to develop self-driving cars that can be truly autonomous.

© Copyright IBTimes 2024. All rights reserved.