Twitter: Microblogging site vows to crack down on trolls

Microblogging site Twitter has long been under scrutiny for its inability to tackle threats of violence and abusive behaviour by users, as well as the use of the platform by terror organisations. After repeated reassurances that failed to resolve the problem, the company has now issued a new set of rules and guidelines that define what counts as abusive, hateful or inciting conduct.

We've updated our rules around abuse and hateful conduct https://t.co/XGBETsUk5h

— Safety (@safety) December 29, 2015

On 30 December, the company released a statement headed "Fighting abuse to protect freedom of expression", setting out the main changes and how they would work.

> Today, as part of our continued efforts to combat abuse, we're updating the Twitter Rules to clarify what we consider to be abusive behaviour and hateful conduct. The updated language emphasizes that Twitter will not tolerate behaviour intended to harass, intimidate, or use fear to silence another user's voice. As always, we embrace and encourage diverse opinions and beliefs — but we will continue to take action on accounts that cross the line into abuse.

Under the new policy, Twitter will suspend or shut down any account that engages in "hateful conduct" or whose purpose is "inciting harm". The company had previously said users could not promote or threaten violence, and in April it added a ban on "promotion of terrorism". It has also given users tools to block, mute and report abusive behaviour.

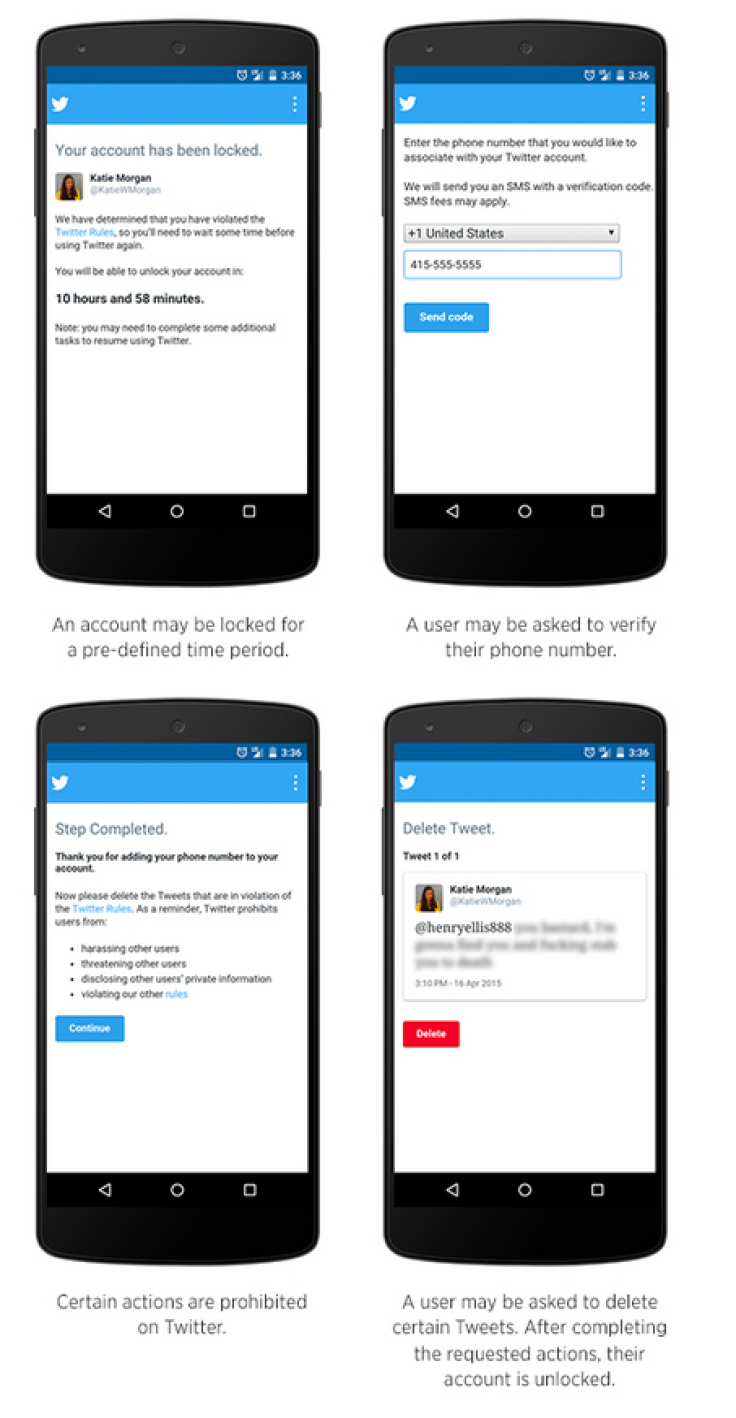

If an account is reported and found to be in violation of the rules, the site will lock it for a period of time and notify the user. The user will then be asked to verify their phone number and directed to delete the offending tweets. The process is similar to one Facebook uses to block accounts.

This is the first time the company has come out strongly against violent behaviour directed at people on the basis of race, ethnicity, nationality, sexual orientation, gender, gender identity, religious affiliation, age, disability or disease. However, enforcement remains a challenge, which is why the company said it has committed more resources, including establishing a new Twitter Safety Centre.

In memos to employees in February, Twitter's then-CEO Dick Costolo took personal responsibility for what he called an inadequate response to the chronic abuse and harassment that occurs daily on the social network, saying: "We suck at dealing with abuse and trolls on the platform and we've sucked at it for years." He also vowed to take stronger action.

The new rules come at a time when cybersecurity and intelligence agencies are increasingly worried about the use of social media platforms such as Twitter and Facebook to spread the messages of groups like Islamic State (Isis). The recent attacks in Paris and the shootings in San Bernardino, California, have made authorities even more alert to terrorists' use of social media.

© Copyright IBTimes 2024. All rights reserved.