EU Commission Plans "AI Factories" To Boost Generative AI Uptake

The document represents the first step toward an industrial policy specific to AI, as countries continue to grapple with the regulatory challenges it presents.

The European Commission will propose the creation of "AI Factories" to help boost the uptake of generative AI in strategic sectors.

According to an early draft paper, the EU executive will present a "strategic framework" on AI use by the end of January.

The document represents the first step toward an industrial policy specific to artificial intelligence, as countries continue to grapple with the regulatory challenges presented by the technology.

The world's first AI Safety Summit was hosted by UK Prime Minister Rishi Sunak at Bletchley Park in November last year.

The summit produced the Bletchley Declaration, in which countries including the UK, the United States and China agreed on the "need for international action to understand and collectively manage potential risks through a new joint global effort to ensure AI is developed and deployed in a safe, responsible way for the benefit of the global community".

While AI regulation is gathering pace and the European Parliament is set to vote on the new AI Act deal this year, no legally binding rules will take effect until 2025 at the earliest.

The EU says its proposed initiative was made necessary by AI's rapid and disruptive acceleration, noting the critical role of foundation models, such as OpenAI's GPT-4, that underpin general-purpose AI systems like ChatGPT and Google's Bard.

"Mastery of the latest developments in generative AI will become a key lever of Europe's competitiveness and technological sovereignty," reads the strategy, which outlines a strategic investment framework capitalising on the EU's assets.

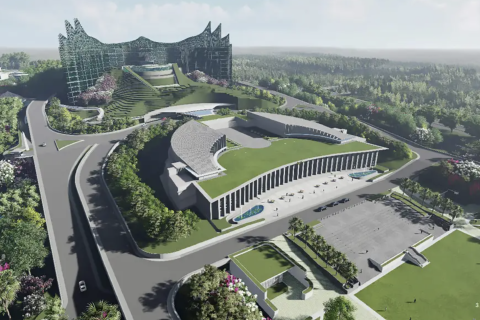

At the centre of the Commission's strategy are so-called 'AI Factories', described as "open ecosystems formed around European public supercomputers and bringing together key material and human resources needed for the development of generative AI models and applications."

Generative AI applications are typically built using foundation models.

These models are built on large artificial neural networks, loosely inspired by the billions of interconnected neurons in the human brain.

Foundation models belong to the field of deep learning, a term that refers to the many layers stacked within these neural networks.

AI-dedicated supercomputers, together with nearby or well-connected 'associated' data centres supporting generative AI, will form the physical infrastructure of the EU's proposed 'factories'. These new hubs are also intended to conduct unspecified "large-scale talent attraction activities".

Start-ups and researchers across the EU could access this computing power as a service, but they must first prove that their work is 'ethical and responsible'. Joining the AI Pact, a voluntary initiative for early compliance with the AI Act, is put forward as a way to demonstrate this commitment.

Earlier this month, many of the world's political and business elites gathered in Davos for the World Economic Forum's annual meeting under the theme of "Rebuilding Trust", at a time when global cooperation is in vanishingly short supply.

Delegates debated the governance of AI, including the long-running dispute over whether the technology should be open-sourced and made available to the general public, or kept secure in the hands of a few companies such as Google, OpenAI and Microsoft.

A new report published by the World Economic Forum claimed AI-powered misinformation is the world's biggest short-term threat.

With 2024 dubbed by many "the year of elections", the WEF's Global Risks Report expressed fears that a wave of AI-driven misinformation and disinformation could influence democratic processes and polarise society.

The annual report concluded that this threat is the most immediate risk to the global economy.

© Copyright IBTimes 2024. All rights reserved.