AI expert Nick Bostrom: Machine superintelligence will have no off switch

One solution would be to contain any advanced AI within a virtual reality simulation from which it cannot escape

The first artificial intelligence that surpasses human capabilities will be impossible to switch off, according to renowned AI theorist Nick Bostrom.

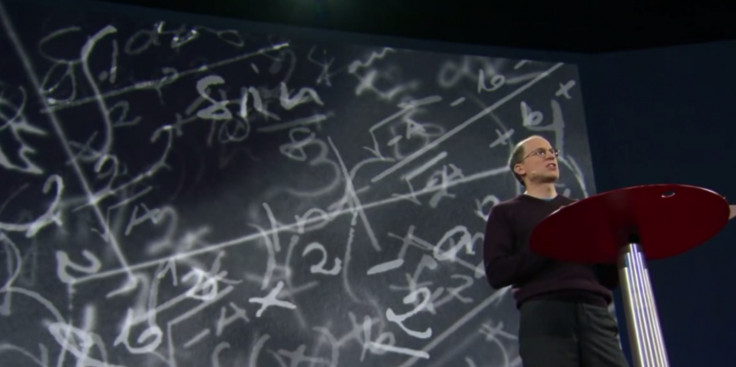

Speaking at a TED (technology, entertainment and design) conference, the Oxford University philosopher discussed the threat posed by the intelligence explosion expected to take place within the next 30 to 40 years, warning that it will reach a point of no return.

"You might say that if a computer starts sticking electrodes into people's faces, we'd just shut it off," Bostrom said. "This is not necessarily so easy to do if we've grown dependent on the system, like, where is the off switch to the internet?

"Why haven't the chimpanzees flicked the off switch to humanity? Or the Neanderthals? They certainly had reasons.

"The reason is that we are an intelligent adversary. We can anticipate threats and plan around them. But so could a superintelligent agent, and it would be much better at that than we are.

"The point is, we should not be confident that we have this under control."

Bostrom suggests that one solution would be to contain any advanced AI within a virtual reality simulation from which it cannot escape. He cautions that this may be futile, however, as the AI could find and exploit bugs in the software to break out.

The other solution would be to create an AI that is "safe and fundamentally on our side" by having shared values with humanity.

These views echo those of other noted AI experts, including Murray Shanahan, professor of cognitive robotics at Imperial College London.

According to Shanahan, the existential threat to humanity that advances in artificial intelligence pose can be negated by creating an AI that is not just human-level but also human-like.

This could be achieved either by pre-programming a computer with human emotions, such as empathy, or by using machine learning to allow it to understand human values. Exactly how either of these outcomes could be reached, however, may depend on first developing the AI.

"We need to be thinking about these risks and devote some resources to be thinking about this issue," Shanahan said during a recent lecture. "Hopefully we've got many decades to go through these possibilities."

© Copyright IBTimes 2025. All rights reserved.