Periscope to combat trolls and online abuse with new user-led moderation system

Periscope is rolling out a moderation system that will let users report comments and vote on whether a flagged comment should be removed. Offenders who repeatedly post abusive material or spam risk being blocked from broadcasts.

It's no secret that social media has a troll problem. Tackling hate speech, extremism and general nastiness is an ongoing commitment for internet companies, who are perpetually ramping up their efforts to make the online community a nicer place to be.

Periscope, the live-streaming app from Twitter, comes with a unique set of problems. Much like live television, moderating broadcasts in real time is difficult, particularly as user comments appear on-screen without first going through any sort of filter. Periscope recognises that this leaves the platform vulnerable to spam and abusive content, and has now introduced tools to help curb it.

How it works

The new comment moderation system is community-led. In other words, it's up to Periscope users themselves to flag undesirable comments and get trolls blocked from broadcasts.

During a broadcast, viewers can report comments as spam or abuse. The viewer who reports a comment will no longer see messages from that commenter for the remainder of the broadcast. The system may also identify commonly reported phrases.

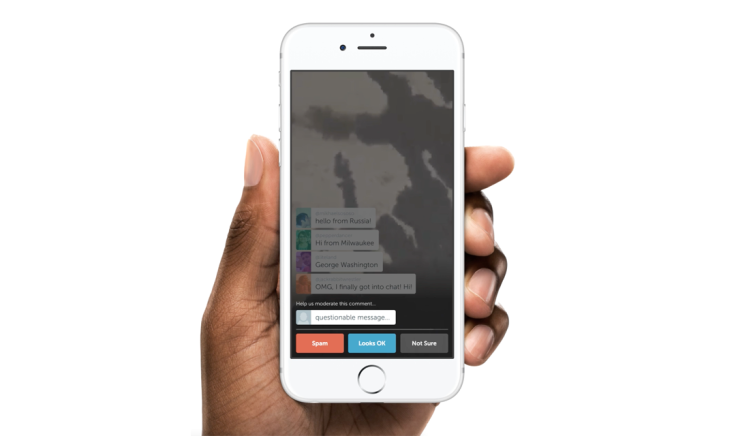

When a comment is reported, a handful of viewers are randomly selected to vote on whether they think the flagged comment is spam, abuse, or looks okay. The result of the vote is then shown to voters. If the majority votes that the comment is spam or abuse, the commenter will be notified that their ability to chat in the broadcast has been temporarily disabled.

Commenters who repeatedly post spam or abuse will be blocked from commenting for the remainder of the broadcast. Periscope says the system has been designed to be lightweight, with a vote taking only a few seconds to complete. Users who don't want their broadcasts moderated can opt out in the app's settings menu.
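
For readers who think in code, the flow described above can be roughly sketched as follows. This is an illustration only, not Periscope's implementation: the class name `BroadcastModerator`, the jury size of five, the two-strike threshold and the choice to keep the reporter off the jury are all assumptions, since Periscope has published none of these details. "Majority" is read here as spam and abuse votes together making up more than half of the votes cast.

```python
import random
from collections import Counter, defaultdict

JURY_SIZE = 5              # assumption: "a handful" of viewers
STRIKES_BEFORE_BLOCK = 2   # assumption: what counts as "repeatedly"


class BroadcastModerator:
    """Hypothetical model of one broadcast's comment moderation."""

    def __init__(self, viewers, rng=None):
        self.viewers = set(viewers)
        self.rng = rng or random.Random()
        self.hidden = defaultdict(set)  # reporter -> commenters they no longer see
        self.strikes = Counter()        # commenter -> number of upheld reports
        self.blocked = set()            # blocked for the rest of the broadcast

    def report(self, reporter, commenter):
        # The reporter stops seeing this commenter straight away.
        self.hidden[reporter].add(commenter)

    def pick_jury(self, reporter, commenter):
        # Randomly select voters; excluding the two parties is an assumption.
        pool = sorted(self.viewers - {reporter, commenter})
        return self.rng.sample(pool, min(JURY_SIZE, len(pool)))

    def resolve(self, commenter, votes):
        # votes is a list of "spam", "abuse" or "ok" strings from the jury.
        tally = Counter(votes)
        upheld = tally["spam"] + tally["abuse"] > len(votes) / 2
        if not upheld:
            return "ok"
        self.strikes[commenter] += 1
        if self.strikes[commenter] >= STRIKES_BEFORE_BLOCK:
            self.blocked.add(commenter)
            return "blocked"
        return "muted"  # chat temporarily disabled
```

In this sketch a first upheld report returns `"muted"`, matching the temporary chat ban, while a second returns `"blocked"` for the remainder of the broadcast. A real system would also need to handle timeouts, viewers who decline to vote and broadcasters who have opted out, none of which is modelled here.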

Periscope says the system has been designed to work in tandem with the tools it already has in place to protect users. For example, users can still report ongoing harassment or abuse, block and remove people from broadcasts, and display comments only from people they know.

It said in a statement: "There are no silver bullets, but we're committed to developing tools to keep Periscope a safe and open place for people to connect in real-time. We look forward to working closely with you and everyone else in the community to improve comment moderation."

© Copyright IBTimes 2024. All rights reserved.