Who is Stuart Russell? Berkeley AI professor to screen film at UN calling for ban on 'killer robots'

The film is intended to urge the UN to preemptively call for a ban on AI weapons.

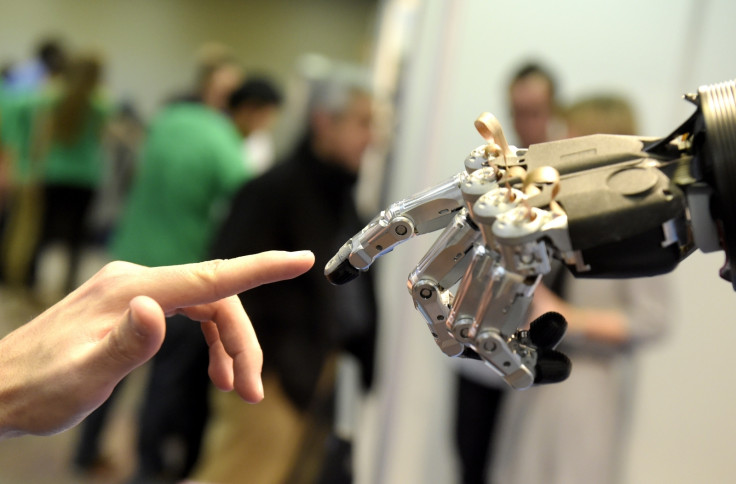

A team from the University of California, Berkeley, will present a short film exploring the effects that autonomous weapons and robots capable of selecting targets and making kill decisions could have on society. The film, titled Slaughterbots, will be screened at the UN's Convention on Conventional Weapons on Monday, 13 November.

The event will be hosted by the Campaign to Stop Killer Robots, an advocacy group made up of NGOs from 28 countries, The Guardian reported.

Speaking about the film, Stuart Russell, an artificial intelligence (AI) scientist and professor at Berkeley, said, "The technology illustrated in the film is simply an integration of existing capabilities. It is not science fiction. In fact, it is easier to achieve than self-driving cars, which require far higher standards of performance."

Russell also warned that the window to halt the development of autonomous weapons like tanks, drones and machine guns is closing fast.

Militaries around the world are among the leading investors in and adopters of AI-based technology, the report noted. Some existing weapons can not only scan terrain and identify targets but also strike with precision and kill people of their own accord.

Drones equipped with cameras can use AI-based image recognition to scan and identify large swaths of ground with an efficiency that humans cannot match.

The film portrays a scenario in which terrorists use cheap, mass-produced AI weapons in the form of small drones to wipe out a university class. The attack is carried out with clinical precision, and the tiny drones are ruthlessly efficient.

In the film, information about the students' identities is fed into a system, which then assigns a number of drones to fire small explosive charges into the targets' heads. The film is both grim and realistic in its portrayal of AI-powered weapons.

"Pursuing the development of lethal autonomous weapons would drastically reduce international, national, local and personal security," Russell added.

Scientists and advocates used a similar argument to convince US presidents Lyndon Johnson and Richard Nixon to give up the US biological weapons programme, which in turn led to the creation of the Biological Weapons Convention, The Guardian pointed out.

The report also noted that a treaty banning AI weapons could halt their mass production while providing a framework for policing research on the technology. "Professional codes of ethics should also disallow the development of machines that can decide to kill a human," Russell said.

Many leaders in industry and academia, such as Elon Musk and Stephen Hawking, have repeatedly warned about the risks of creating and using AI more broadly.

Noel Sharkey, a professor of AI at Sheffield University and chair of the International Committee on Robot Arms Control, said, "The movie made my hair stand on end as it crystallises one possible futuristic outcome from the development of these hi-tech weapons."

Sharkey reportedly warned about the rise of AI weapons a decade ago. He also said that the UN moves at an "iceberg pace" but added that the work must still be done "because the alternatives are too horrifying".
