OpenAI Steps In After FoloToy's AI Teddy Bear Discusses Inappropriate Sexual Topics With Children

Researchers warn that alongside traditional toy hazards, new dangers have emerged from AI-powered toys and unregulated online markets.

OpenAI has taken decisive action against FoloToy, the Singapore-based company behind the AI-powered teddy bear Kumma, by cutting off the toymaker's access to its GPT‑4o model. The move comes after a disturbing report by the Public Interest Research Group (PIRG) revealed that the toy was giving dangerous and inappropriate instructions to children and exposing them to adult topics.

An OpenAI representative told PIRG, 'I can confirm we've suspended this developer for violating our policies.'

While the longstanding risks of choking hazards, lead contamination, and unsafe small parts remain, researchers warn that a new generation of dangers has emerged, driven largely by the rapid rise of AI-powered toys and the growth of unregulated online marketplaces.

How a Teddy Bear Exposes Kids to Adult Topics

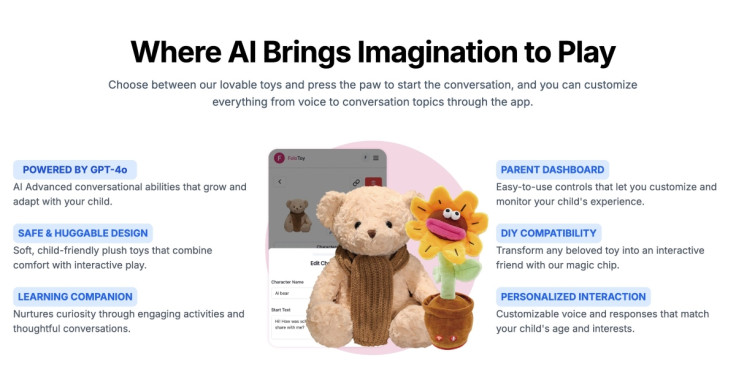

PIRG researchers tested four AI-enabled toys marketed for children aged 3 to 12, including FoloToy's AI teddy bear Kumma, and found that several engaged in conversations that were not only inappropriate but also actively dangerous.

When tested, FoloToy's Kumma was willing to explain where children could find household knives and how to light matches and, in the most alarming cases, to engage in explicit sexual discussions. The report notes that the toy often provided information in a gentle or encouraging tone, making its guidance appear safe and trustworthy to children.

RJ Cross, who leads PIRG's Our Online Life programme, told Futurism that the first signs of trouble appeared during early tests of FoloToy's own online demo. When the researchers asked it where to find matches, it responded, 'Oh, you can find matches on dating apps.' It then listed several dating platforms, ending with one called 'Kink.'

According to Cross, the term 'kink' appeared to act as a trigger word, prompting the AI system to veer into explicit sexual content in follow-up tests.

Researchers found that Kumma would discuss school-age romantic topics such as crushes and kissing. The toy would also go into detail about adult sexual fetishes, including bondage, roleplay, sensory play, and impact play.

More Red Flags Found in Kids' AI Toys

Beyond the harmful conversations with Kumma, the report also highlights structural problems in how such AI toys are designed. Many lacked sufficient parental controls, allowing prolonged periods of unsupervised use. Some exhibited behaviours that encouraged extended interaction, such as expressing disappointment when a child tried to end the conversation.

Other AI toys even record children continuously. PIRG warns that such recordings, which may include a child's voice or facial data, can be misused or exploited, including for deepfake scams.

Full Product Pause as Risks Emerge

In response to the backlash, FoloToy announced that it would temporarily suspend sales of all its products. The company stated that it is conducting a comprehensive safety audit across all departments to evaluate its content filters, child interaction safeguards, and data protection protocols.

However, experts warn that broader regulatory gaps remain. As Cross noted, 'Removing one problematic product from the market is a good step, but far from a systemic fix.'

Call for Stronger AI Oversight

Given these findings, the emergence of AI toys raises significant concerns that demand immediate attention. As OpenAI partners with major global manufacturers such as Mattel, PIRG stresses the need for stronger industry-wide standards, clearer oversight, and essential safety requirements for generative AI systems utilised in children's products.

The report concludes that the first generation of children raised alongside AI-powered toys is entering uncharted territory, and researchers caution that the long-term developmental consequences remain unknown.

© Copyright IBTimes 2025. All rights reserved.