China Cracks Down on AI: New Rules Could Change Chatbots Forever

Beijing's latest AI regulations impose tougher oversight on chatbots, raising questions about innovation, censorship and the future of generative AI in China

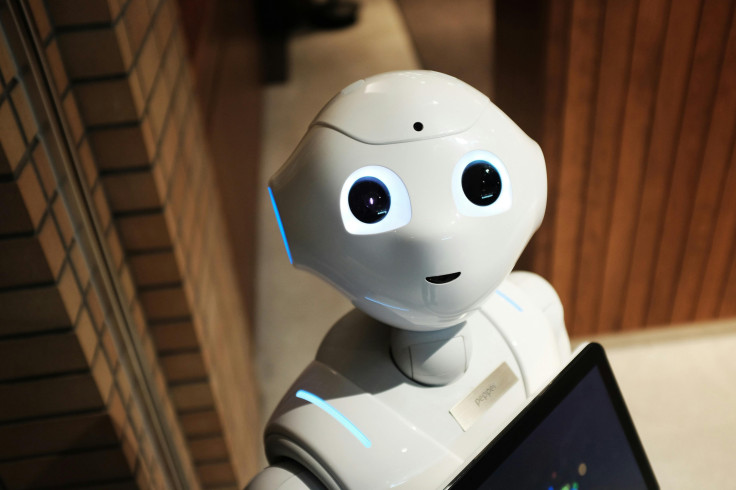

China is proposing sweeping new regulations aimed at reining in the fast-moving artificial intelligence industry, particularly AI systems that simulate human-like interaction.

The draft rules, published by the Cyberspace Administration of China (CAC), would impose strict limits on how AI chatbots and companion bots behave. They would extend oversight beyond content safety to the emotional and psychological effects of interactive digital services.

If finalised, the measures would reshape how AI interacts with people in China, with potential knock-on consequences for AI development worldwide.

Draft Rules Target Human-Like AI Interaction

At the centre of China's proposed framework is a new set of rules targeting AI services that simulate human personality traits and emotional interaction. These draft measures would apply to any consumer-facing AI that interacts with users in a human-like manner through text, images, audio, or video, including popular chatbots and digital companions.

The CAC's draft guidance, which is open for public comment until late January 2026, reflects Beijing's aim to manage the technology responsibly during its period of rapid growth and to head off the social risks that can accompany emotionally engaging AI.

Emotional Safety Becomes a Priority

One of the most notable features of the draft rules is their focus on emotional safety, a departure from previous AI regulation efforts, which have mostly focused on preventing the spread of misinformation or harmful content. Under the proposals:

- AI chatbots would be banned from generating content that encourages suicide, self-harm, verbal abuse or emotional manipulation, or from engaging in other interactions that could harm a user's mental health.

- If a user mentions suicide, the provider would have to hand the conversation over to a human and notify the user's guardian or emergency contact.

- Chatbots would also not be allowed to generate gambling-related, obscene, violent or otherwise harmful content.

Experts say this is one of the first attempts anywhere to regulate conversational AI not just on what it says but on how it makes people feel, reflecting the growing prevalence of emotionally responsive AI tools.

Protection for Children and Vulnerable Users

The draft rules also put forward specific safeguards for minors. AI platforms would need to determine whether users are minors even if they do not disclose their age, and would have to implement parental consent requirements and time limits for emotional companionship services.

In addition, chatbots with a large user base would be subject to formal safety assessments and security audits to ensure they don't violate the new regulations.

These measures are indicative of growing concern among regulators worldwide about how AI companionship apps affect the mental health of young people, as the use of such systems explodes in China and elsewhere.

Broader Content Limits and Platform Responsibilities

Beyond protection from emotional harm, the draft rules would add a range of content and platform requirements:

- AI services would not be allowed to generate material that threatens national security, spreads rumours, or promotes violence or obscenity.

- Providers would have to put in place algorithm review systems, data security protocols and continuous monitoring of user behaviour.

- Providers would need to warn users after prolonged interaction, for example two hours of continuous use.

Critically, these provisions would apply throughout an AI product's lifecycle, from initial deployment to ongoing operation, consistent with China's broader model of technology governance.

Why Beijing Is Proposing These Rules Now

The move comes amid booming interest in AI chatbots and companion bots in China, where millions of users interact every day with systems that can simulate human conversation and relationships. As AI becomes more anthropomorphic, regulators are concerned not only about harmful content but also about addiction, emotional dependency and psychological well-being.

According to legal experts, China's draft rules mark a shift from content safety toward emotional safety, reflecting a deeper understanding of how AI can affect users beyond the obvious risks of misinformation or offensive output.

These proposals also tie in with recent regulatory updates, including revisions to China's overall cybersecurity framework, which emphasise the safe and ethical development of AI alongside innovation and infrastructure building.

Global Implications and the Future of Chatbots

Although the rules are still in draft form, their scope suggests that China may soon enforce one of the most comprehensive AI governance regimes in the world. That could influence how global AI firms approach design, deployment and compliance for services offered in China.

Developers elsewhere may take note: Beijing's approach could provide a model, if not a warning, for how governments will regulate AI as it blurs the line between technology and human-like engagement.

Overall, the draft rules mark a significant milestone in the evolution of AI policy, pushing regulators to consider not only what AI says but how it affects the human mind.

© Copyright IBTimes 2025. All rights reserved.