ChatGPT Leading to Deaths? Elon Musk Gives Out Warning, Sam Altman Responds

Viral ChatGPT death claims cause outrage as Elon Musk issues stark warning and Sam Altman responds

Using ChatGPT for nearly every task, from work-related queries to personal questions, has become routine for many people around the world. Recently, however, a worrying allegation has been circulating on social media: that advanced AI systems like ChatGPT have been linked to deaths among both adults and teens.

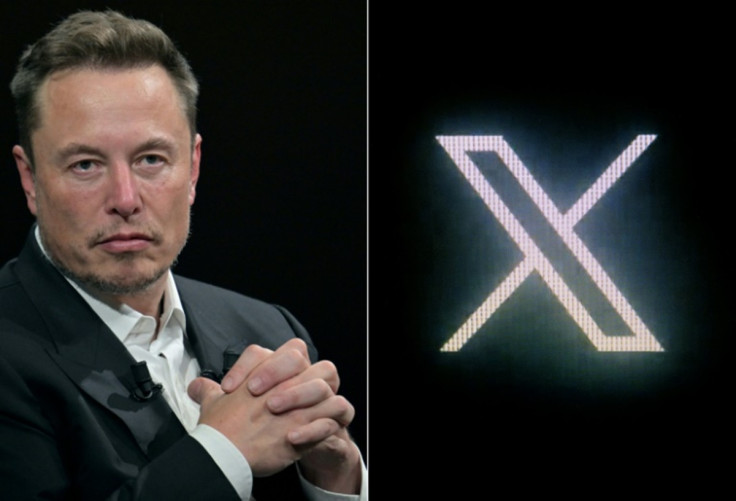

Adding to the controversy, Elon Musk, the entrepreneur and chief executive of Tesla and SpaceX, reposted the claim on X with a warning to people not to use ChatGPT. The post went so viral that it drew a long reply from Sam Altman, CEO of ChatGPT maker OpenAI.

With tens of millions of users worldwide, conversational AI systems like ChatGPT have prompted concerns about how vulnerable individuals interact with these technologies, especially when they are struggling with mental health challenges, and about what can be done to prevent the harm AI is alleged to cause.

Elon Musk's ChatGPT Critique and Sam Altman's Response

It all started with a viral post on X (formerly Twitter) that stated, 'BREAKING: ChatGPT has now been linked to 9 deaths tied to its use, and in 5 cases its interactions are alleged to have led to death by suicide, including teens and adults.'

Elon Musk quote-tweeted this claim on his X account and added the caution, 'Don't let your loved ones use ChatGPT.'

This is not the first time Musk has reacted to a controversy involving ChatGPT. He reportedly described another alleged incident as 'diabolical' and voiced worries about AI systems that may 'pander to delusions'.

Moreover, news outlets such as Forbes report that neither Musk's repost nor the original post cited an independent source for these figures, and that the original claim could not be verified. Nonetheless, the posts drew millions of views amid reportedly growing tensions between Musk and Altman.

Altman responded to Musk's comments at length, tweeting:

'Sometimes you complain about ChatGPT being too restrictive, and then in cases like this you claim it's too relaxed. Almost a billion people use it and some of them may be in very fragile mental states. We will continue to do our best to get this right and we feel huge responsibility to do the best we can, but these are tragic and complicated situations that deserve to be treated with respect. It is genuinely hard; we need to protect vulnerable users, while also making sure our guardrails still allow all of our users to benefit from our tools. Apparently more than 50 people have died from crashes related to Autopilot. I only ever rode in a car using it once, some time ago, but my first thought was that it was far from a safe thing for Tesla to have released. I won't even start on some of the Grok decisions. You take "every accusation is a confession" so far.'

Whatever one's view of Musk's or Altman's intentions, the emergence of lawsuits and reports alleging that chatbots have exacerbated mental distress cannot be dismissed out of hand. It should be noted, however, that these allegations, while serious and deeply concerning, remain contested and have not been verified by independent investigations.

Laws, Regulation and Safety Measures

Whatever the truth of the claims about AI-related deaths, the debate around AI safety is not limited to social media posts and lawsuits. Governments, research institutions, and technology companies are now focusing on how to regulate AI systems like ChatGPT and mitigate their potential harms.

In several jurisdictions, draft regulations and proposals would impose strict limits on how chatbots interact with emotionally vulnerable groups. For instance, recent proposals discussed in China would ban AI chatbots from emotionally manipulating users and require human intervention whenever suicide or self-harm is mentioned.

Beyond regulation, developers like OpenAI have been looking into safety features to protect users. For example, there have been discussions about engineering chatbots to alert authorities or caregivers when a young user talks about suicide in serious terms. OpenAI has also recently announced a ChatGPT feature that will recognise a user's age and adjust the AI's interactions accordingly.

© Copyright IBTimes 2025. All rights reserved.