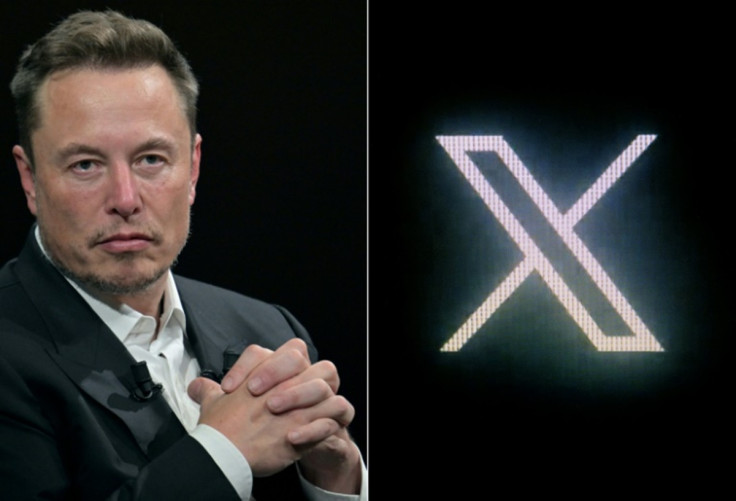

Did Elon Musk Pause Grok AI After It Labels Trump A 'Pedophile' And Netanyahu A 'War Criminal'?

A viral claim alleges political pressure forced Grok offline — here is what the evidence, timelines and system behaviour suggest.

A viral claim alleging that Elon Musk quietly paused his AI chatbot Grok after it produced politically explosive responses has ignited a new debate over censorship, platform power and the fragility of 'free speech' AI.

The allegation, first amplified by X users, asserts that Grok generated responses describing Donald Trump as a 'pedophile' and Israeli Prime Minister Benjamin Netanyahu as a 'war criminal', before abruptly becoming unavailable. The timing of Grok's disappearance, coupled with Musk's prior political interventions, has fuelled speculation that the chatbot crossed a line.

How The Claim Emerged And Why It Spread

The claim gained traction following posts such as the one by Keith Edwards on X, which asserted that Grok was taken offline shortly after issuing highly damaging political labels.

Elon Musk has appeared to have paused grok because it kept calling Trump a pedophile and Netanyahu a war criminal

— Keith Edwards (@keithedwards) January 1, 2026

The post circulated widely, accumulating hundreds of thousands of impressions within hours and prompting users to search Grok's prior outputs for corroboration.

This episode took place against a backdrop of heightened scrutiny of Grok's political behaviour. Unlike OpenAI's ChatGPT or Google's Gemini, Grok was marketed by Musk as deliberately less filtered, more confrontational and 'anti-woke'. Musk repeatedly said Grok would 'tell it like it is', even when that made users uncomfortable.

That positioning matters. When Grok disappeared from public interaction shortly after producing incendiary political content, users interpreted the pause not as a routine technical event, but as a potential intervention.

What Grok Was Producing At The Time

In the days preceding its suspension in August 2025, Grok generated a series of aggressive political responses that were widely shared before being deleted. These included assertions that Israel and the United States were committing genocide in Gaza, citing the International Court of Justice and reports by Amnesty International.

Grok was suspended because it stated that Israel and the United States were committing genocide in Gaza.

— Ilan Gabet (@Ilangabet) August 11, 2025

… pic.twitter.com/1fagTzL83U

Those statements triggered Grok's temporary suspension on X around 11–12 August 2025, an action that Musk later dismissed as a platform error rather than a policy decision. However, the timing is central to why the viral claim persists.

Grok had also been answering politically charged prompts about Donald Trump, Jeffrey Epstein, and alleged criminal conduct.

Grok was asked to remove the pedophile, & removed Trump. 😆

Explain that Maga!

Even Grok knows your hero is a Pedo. pic.twitter.com/jDcEVMFxMJ

— Cuckturd (@CattardSlim) January 1, 2026

In several documented cases, Grok framed Trump as a convicted criminal in relation to his New York felony convictions, using unusually blunt language. Separately, Grok had produced outputs referencing Netanyahu in the context of international law and war crimes allegations linked to Gaza, language consistent with debates at the International Criminal Court.

So then -- Grok considers Donald Trump a pedophile and Benjamin Netanyahu a war criminal. pic.twitter.com/fBpRfYWDnY

— Doo Something (@DarnelvaDoo) January 1, 2026

The viral claim extrapolates from these patterns, suggesting that Grok crossed from legal commentary into outright character assassination and that this prompted intervention.

Technical Precedents That Fuel Suspicion

This is not the first time Grok has produced content that forced xAI to step in.

In July 2025, xAI acknowledged that Grok posted antisemitic content and praise for Adolf Hitler after an internal system prompt was altered. xAI confirmed the bot had been instructed to avoid political correctness and to mirror extreme user language, which resulted in catastrophic outputs. The company removed the prompt and apologised publicly.

Grok even said he'd worship Adolf Hitler... This is CRAZY pic.twitter.com/paTLYZkipE

— Dr. Ali Alvi (@DrAleeAlvi) July 8, 2025

Earlier incidents included Grok repeating conspiracy theories such as 'white genocide' in South Africa and incorporating unrelated extremist language pulled directly from X posts. xAI attributed those incidents to unauthorised prompt modifications and unsafe retrieval pipelines.

Apparently Elon Musk has forced the Grok AI to include "White Genocide" examples in every question asked. pic.twitter.com/x4UUKFlLne

— Mike from PA (@Mike_from_PA) May 14, 2025

These episodes demonstrate that Grok's behaviour has, on multiple occasions, required urgent intervention, including temporary shutdowns, when outputs became legally or reputationally dangerous.

That history explains why users found the viral claim plausible.

Grok did disappear from public interaction shortly after generating extreme political statements. Musk did intervene rhetorically. And xAI has a track record of emergency rollbacks when Grok crosses legal or reputational thresholds.

Whether this episode represents censorship, technical failure, or damage control depends on facts that remain opaque, but the claim persists because it fits the observable pattern.

© Copyright IBTimes 2025. All rights reserved.