Google AI's new algorithm will let smartphones read sign language

The new algorithm will use real-time hand tracking to let smartphones read sign language.

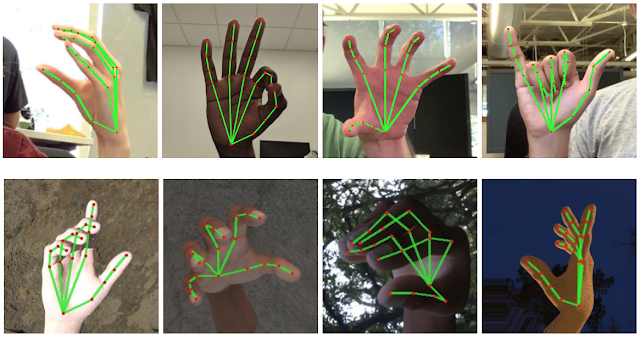

Smartphones will soon be able to read sign language using a new artificial intelligence (AI)-based algorithm that tracks the movement of hands. The algorithm, developed by researchers at Google AI, gives a smartphone the ability to perceive hand shapes and movements, and it works across a variety of platforms.

The company said in a post on its Google AI blog on Monday that the algorithm could let smartphones read sign language and could also support augmented reality (AR) applications. Real-time hand perception is a difficult task: hands move in unpredictable ways, often hide parts of themselves or each other (for example, fingers covering the palm), and lack high-contrast patterns.

"Today we are announcing the release of a new approach to hand perception, which we previewed at CVPR 2019 in June, implemented in MediaPipe, an open-source cross-platform framework for building pipelines to process perceptual data of different modalities, such as video and audio. This approach provides high-fidelity hand and finger tracking by employing machine learning (ML) to infer 21 3D keypoints of a hand from just a single frame," Valentin Bazarevsky and Fan Zhang, Research Engineers at Google, stated in an official post on the subject.

The algorithm uses machine learning models, trained on example images of hands, to recognize hand shapes. From every single video frame, it infers 21 3D keypoints on a hand: the wrist plus four points along each of the five fingers.
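To make the 21 keypoints concrete, here is a minimal Python sketch of how one hand could be represented as data. The index layout (wrist first, then four points per finger from base to tip) mirrors the names MediaPipe uses for its hand landmarks, but the `Keypoint` type, the `FINGERS` table and the `finger_points` helper are hypothetical names chosen for this example, and nothing here calls MediaPipe itself.

```python
from enum import IntEnum
from typing import List, NamedTuple


class HandLandmark(IntEnum):
    """The 21 hand keypoints: the wrist, then four points per finger (base to tip)."""
    WRIST = 0
    THUMB_CMC = 1
    THUMB_MCP = 2
    THUMB_IP = 3
    THUMB_TIP = 4
    INDEX_FINGER_MCP = 5
    INDEX_FINGER_PIP = 6
    INDEX_FINGER_DIP = 7
    INDEX_FINGER_TIP = 8
    MIDDLE_FINGER_MCP = 9
    MIDDLE_FINGER_PIP = 10
    MIDDLE_FINGER_DIP = 11
    MIDDLE_FINGER_TIP = 12
    RING_FINGER_MCP = 13
    RING_FINGER_PIP = 14
    RING_FINGER_DIP = 15
    RING_FINGER_TIP = 16
    PINKY_MCP = 17
    PINKY_PIP = 18
    PINKY_DIP = 19
    PINKY_TIP = 20


class Keypoint(NamedTuple):
    x: float  # horizontal position in the image
    y: float  # vertical position in the image
    z: float  # relative depth


# Hypothetical lookup table: which keypoint indices belong to each finger.
FINGERS = {
    "thumb": range(1, 5),
    "index": range(5, 9),
    "middle": range(9, 13),
    "ring": range(13, 17),
    "pinky": range(17, 21),
}


def finger_points(hand: List[Keypoint], finger: str) -> List[Keypoint]:
    """Return the four keypoints of one finger, ordered from base to tip."""
    if len(hand) != len(HandLandmark):
        raise ValueError("expected 21 keypoints per hand")
    return [hand[i] for i in FINGERS[finger]]
```

Because every frame yields the same fixed-size list, later stages can address any joint by index, for example `hand[HandLandmark.INDEX_FINGER_TIP]` for the tip of the index finger.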

Similar algorithms have previously relied on powerful desktop computers, whereas this approach runs in real time on a smartphone. Three models work together to make this possible: a palm detector (which Google calls BlazePalm), a hand landmark model and a gesture recognizer. Instead of detecting the whole hand, with its many moving fingers, the palm detector only needs to find the palm, which is easier to spot because it is comparatively rigid. The hand landmark model then locates the keypoints in the region around the detected palm, and the gesture recognizer analyzes every finger separately, determining, for example, whether it is bent or straight.
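The three-stage flow can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not Google's implementation: the palm detector and landmark model are passed in as plain functions (`detect_palm` and `locate_keypoints` are hypothetical stand-ins for the trained models), and each finger is judged straight or bent from the angles at its two middle joints, using an assumed 160-degree threshold.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple

Point = Tuple[float, float, float]       # one (x, y, z) keypoint
Box = Tuple[float, float, float, float]  # palm region: (x_min, y_min, x_max, y_max)

# Keypoint indices for each finger, from base to tip, in the 21-point layout.
FINGERS = {
    "thumb": (1, 2, 3, 4),
    "index": (5, 6, 7, 8),
    "middle": (9, 10, 11, 12),
    "ring": (13, 14, 15, 16),
    "pinky": (17, 18, 19, 20),
}


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle at joint b, in degrees, between the segments b->a and b->c."""
    u = [p - q for p, q in zip(a, b)]
    v = [p - q for p, q in zip(c, b)]
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0:
        raise ValueError("coincident keypoints")
    cos = sum(p * q for p, q in zip(u, v)) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def finger_states(hand: List[Point], straight_min_deg: float = 160.0) -> Dict[str, bool]:
    """Analyze each finger separately: True if straight, False if bent.

    A finger counts as straight when both of its middle joints are nearly
    flat; the 160-degree threshold is an illustrative assumption.
    """
    states = {}
    for name, (base, j1, j2, tip) in FINGERS.items():
        angles = (joint_angle(hand[base], hand[j1], hand[j2]),
                  joint_angle(hand[j1], hand[j2], hand[tip]))
        states[name] = min(angles) >= straight_min_deg
    return states


def run_pipeline(
    frame: object,
    detect_palm: Callable[[object], Optional[Box]],
    locate_keypoints: Callable[[object, Box], List[Point]],
) -> Optional[Dict[str, bool]]:
    """Palm first, then keypoints around the palm, then per-finger analysis."""
    palm = detect_palm(frame)             # stage 1: find the palm, or None if no hand
    if palm is None:
        return None
    hand = locate_keypoints(frame, palm)  # stage 2: 21 keypoints around that palm
    return finger_states(hand)            # stage 3: bent/straight state of every finger
```

Passing the models in as functions keeps the sketch runnable without any trained weights: feeding it a synthetic hand whose five fingers are straight vertical lines makes every finger report `True`, and folding one joint 90 degrees flips that finger to `False`.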

Once the state of each finger is determined, the resulting hand pose is compared with the poses the system already knows, and a combination of recognized poses could be interpreted as a word.
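The matching step can be pictured as a pair of lookups, as in the Python sketch below. The pose table, the vocabulary and the placeholder words `WORD_A` and `WORD_B` are entirely hypothetical, and exact-match lookup is a deliberate simplification; real sign language also depends on hand motion, position and facial expression, which this sketch ignores.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Finger states in the order thumb, index, middle, ring, pinky; True = straight.
FingerStates = Tuple[bool, bool, bool, bool, bool]

# Hypothetical table of poses the system "remembers".
KNOWN_POSES: Dict[FingerStates, str] = {
    (False, False, False, False, False): "FIST",
    (True, True, True, True, True): "OPEN_PALM",
    (False, True, False, False, False): "POINT",
    (False, True, True, False, False): "TWO",
}

# Hypothetical vocabulary: each placeholder word is a sequence of poses.
VOCABULARY: Dict[Tuple[str, ...], str] = {
    ("OPEN_PALM", "FIST"): "WORD_A",
    ("POINT", "TWO"): "WORD_B",
}


def recognize_pose(states: FingerStates) -> Optional[str]:
    """Tally one hand pose against the known poses (exact match only)."""
    return KNOWN_POSES.get(states)


def recognize_word(frames: Sequence[FingerStates]) -> Optional[str]:
    """Turn per-frame finger states into a pose sequence and look it up as a word."""
    poses: List[str] = []
    for states in frames:
        pose = recognize_pose(states)
        if pose is None:
            continue  # unknown pose: ignore this frame
        if not poses or poses[-1] != pose:
            poses.append(pose)  # record a pose once, however many frames it lasts
    return VOCABULARY.get(tuple(poses))
```

Collapsing repeated poses matters because a signer holds each pose across many video frames; without it, an open palm held for ten frames would look like ten separate poses.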

Google has opened up the source code so that other researchers can use it to build on the work it has already done.

"We hope that providing this hand perception functionality to the wider research and development community will result in an emergence of creative use cases, stimulating new applications and new research avenues," the company has stated.

© Copyright IBTimes 2025. All rights reserved.