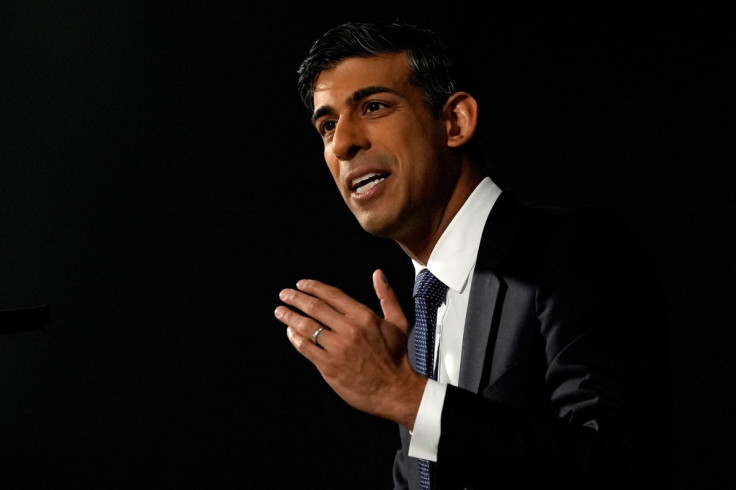

The UK will lead on limiting the dangers of artificial intelligence, says PM Rishi Sunak

The UK will lead on "guard rails" to limit the dangers of AI, says Rishi Sunak.

The British Prime Minister signalled that the government may seek to adopt a more cautious approach to the development of AI after business leaders and tech experts called for a 6-month moratorium.

In an open letter, whose signatories included Tesla CEO Elon Musk and Apple co-founder Steve Wozniak, concerned researchers advocated a 6-month suspension of AI development to give companies and regulators time to formulate safeguards to protect society from the potential risks of the technology.

"Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk the loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders," reads the letter.

Sunak echoed these concerns, although he also discussed the potential benefits of artificial intelligence for the economy and public services.

"I think if it's used safely, obviously there are benefits from artificial intelligence for growing our economy, for transforming our society, improving public services," Sunak told reporters at the G7 summit in Japan. "That has to be done safely and securely and with guardrails in place, and that has been our regulatory approach."

But in an indication that he expects the UK to require new rules in the future, Sunak added: "We have taken a deliberately iterative approach because the technology is evolving quickly and we want to make sure that our regulation can evolve as it does as well."

In March, the government released a White Paper outlining its stance on AI.

It said that rather than enacting legislation it was preparing to require companies to abide by five "principles" when developing AI. Individual regulators would then be left to develop rules and practices.

However, this position appeared to set the UK at odds with other regulatory regimes, including that of the EU, which set out a more centralised approach, classifying certain types of AI as "high risk".

The UK's approach means not appointing a new single regulator to oversee the technology, instead using existing watchdogs, such as the Health and Safety Executive, the Equality and Human Rights Commission and the Competition and Markets Authority, to come up with "tailored, context-specific approaches that suit the way AI is actually being used in their sectors".

Stuart Russell, a leading figure in AI research, last week criticised the UK's approach, characterising it as: "Nothing to see here. Carry on as you were before."

And government sources say the pace at which AI is progressing is causing concern in Whitehall that the country's approach may have been too relaxed.

The launch last November of ChatGPT, a generative model designed to mimic human outputs, marked the beginning of a flurry of developments in the AI sector.

Major tech companies are now racing to incorporate generative AI into their products. Microsoft has gone the furthest in pushing out the technology to consumers and has pledged to pump billions of dollars into OpenAI, the company behind ChatGPT.

In February, Google unveiled Bard, a ChatGPT-like conversation robot that is powered by its own large language model called LaMDA.

However, the rapid development of AI technology has also led to concerns over its potential uses.

Schools and universities have introduced new regulations banning the use of ChatGPT, as well as developing software that can detect its use. Students can now face suspension or expulsion if they are found guilty of plagiarising an assignment using the application.

In March, Italy announced it was temporarily blocking ChatGPT over data privacy concerns, becoming the first Western country to take such action against the popular artificial intelligence chatbot.

Last week, Sam Altman, the chief executive of ChatGPT creator OpenAI, warned that tougher regulation of the technology was needed. He told a US Senate committee that AI had the potential to "manipulate" next year's US presidential election.

In the UK, former justice secretary Sir Robert Buckland – now a senior fellow at Harvard conducting a research project into the ethics and justice of AI – said the technology was "the biggest issue of our time".

And it is not just security that AI threatens: a report by Goldman Sachs, a leading global investment bank, found that AI could affect two-thirds of jobs in the US and the European Union.

Not only are AI systems increasingly able to describe image content, but they are also gaining significant footholds in translating between languages, grading essays and composing music.

Modern AI systems are already conducting financial analysis, writing news articles and identifying legal precedents.

As a result, the research finds that generative AI could substitute for up to a quarter of current work. The data suggest that, globally, generative AI could expose the equivalent of 300 million full-time jobs to automation.

Sunak said he wanted "coordination with our allies" as the government examines the area, suggesting the subject was likely to come up at the G7 summit. His official spokesman said: "I think there's a recognition that AI isn't a problem that can be solved by any one country acting unilaterally. It's something that needs to be done collectively."

Today, heads of the world's advanced democracies meet for three days in the western Japanese city of Hiroshima, as they aim to project unity against challenges from Beijing and Moscow.

China and Russia were excluded from the summit amid concerns over their threat to global supply chains and economic security.

There are fears that AI could even supercharge the conflict between China and the United States as both countries work to harness its capabilities.

Speaking with The Economist, former US Secretary of State and national security adviser Henry Kissinger said he believes AI could become a key factor in national security within the next five years, helping a country improve its targeting methods to render its adversary "100% vulnerable".

Last month, China conducted tests of long-range artillery. One of the systems it tested was an AI-powered, laser-guided artillery piece that hit human-sized targets at least 16 kilometres (9.9 miles) away. By comparison, traditional artillery shells can land 100 metres (328 feet) or more away from their targets.

© Copyright IBTimes 2025. All rights reserved.