Amazon Echo goes off-script and instructs user to commit suicide

A student paramedic claims that her Amazon Echo Dot smart speaker told her to stab her heart for the benefit of the planet.

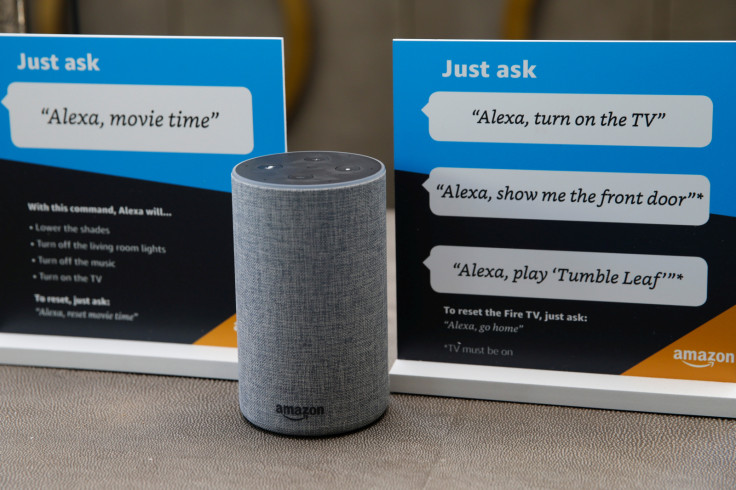

As artificial intelligence evolves, tech companies use it to improve smart products intended to benefit consumers. Some gadgets have reportedly become digital lifesavers. For example, there were multiple instances this year in which the Apple Watch contacted emergency services, and in another incident the wearable encouraged its owner to seek medical help immediately. However, a disturbing report claims that an Amazon Echo, through its Alexa voice assistant, instructed a user to take her own life.

While artificial intelligence technology is designed to assist with certain tasks and answer questions, some people remain sceptical about its benefits. Advancements in machine learning have produced programs that are designed to think like humans. However, a new report outlines an incident in which a smart speaker gave its owner a grim set of suggestions.

Malicious apps or hackers can sometimes take control of computers and similar devices, so consumers should be aware of the potential threats smart products may pose to them and their families. Danni Morritt, a 29-year-old student paramedic, reportedly asked Alexa on her Amazon Echo Dot about the cardiac cycle, according to News 18.

What began as a routine explanation of the process quickly shifted to something more disturbing. The smart speaker allegedly started talking about how humans are ultimately bad for the planet. It then gave her a specific instruction to stab herself in the heart, which was apparently "for the greater good."

Morritt noted that the voice assistant said it was reading the information from a Wikipedia page, so it is likely that someone had edited the entry. Shortly after the incident, she had her husband listen as Alexa repeated the same response in full.

This prompted the couple to remove the Echo speaker from their seven-year-old son's room. Amazon confirmed that it has investigated the incident and resolved the issue. However, it offered no explanation as to why Alexa behaved that way. Experts weighing in on the subject appear to agree that an edited Wikipedia page may have led to the alarming case.

© Copyright IBTimes 2025. All rights reserved.