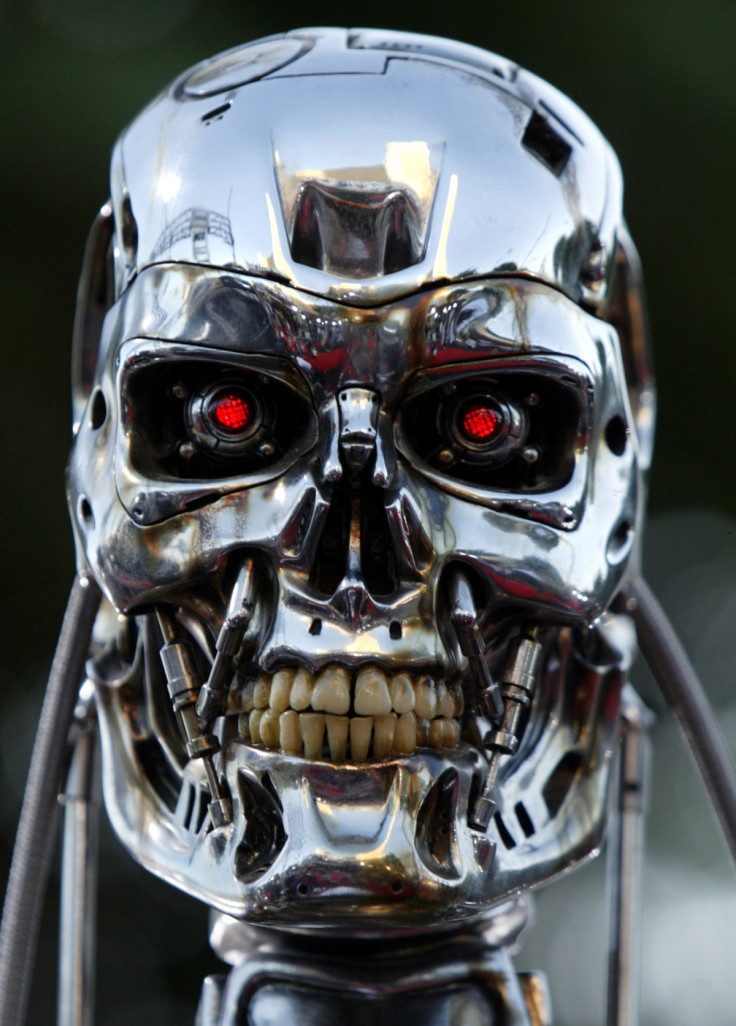

Ban killer robots, urges Human Rights Watch

Killer robots could be used by military organisations to evade responsibility for murdering civilians, and ought to be banned by the UN before they can be developed, according to a report from Human Rights Watch.

There are significant legal obstacles to assigning accountability for actions performed by autonomous machines, and difficulties in ensuring they would ever be able to distinguish between military and civilian targets, said HRW.

The report, entitled Mind the Gap: The Lack of Accountability for Killer Robots, was published by HRW in conjunction with Harvard Law School.

The possible development of autonomous killing machines "raises serious moral and legal concerns because they would possess the ability to select and engage their targets without meaningful human control," the report said.

"The lack of meaningful human control places fully autonomous weapons in an ambiguous and troubling position. On the one hand, while traditional weapons are tools in the hands of human beings, fully autonomous weapons, once deployed, would make their own determinations about the use of lethal force," states the report.

"They would thus challenge longstanding notions of the role of arms in armed conflict, and for some legal analyses, they would be more akin to a human soldier than to an inanimate weapon. On the other hand, fully autonomous weapons would fall far short of being human."

Because of these legal obstacles, military commanders are unlikely to be held accountable for the actions of autonomous robots, and so would probably not face trial for crimes committed by the machines.

"A fully autonomous weapon could commit acts that would rise to the level of war crimes if a person carried them out, but victims would see no one punished for these crimes. Calling such acts an 'accident' or 'glitch' would trivialise the deadly harm they could cause," writes Bonnie Docherty, senior arms division researcher at HRW, the report's lead author.

The organisation argues that, given the risk of the machines being used to kill civilians with impunity, they ought to be banned pre-emptively by the UN. Blinding lasers were pre-emptively banned by the UN in 1995.

It urges a ban "on the development, production and use of fully autonomous weapons through an international legally binding agreement", and argues that individual states ought to adopt similar domestic laws.

The report argues that precursors and prototypes of autonomous weapons already exist.

"For example, many countries use weapons defence systems – such as the Israeli Iron Dome and the US Phalanx and C-RAM – that are programmed to respond automatically to threats from incoming munitions."

"Prototypes exist for planes that could autonomously fly on intercontinental missions [the UK's Taranis] or take off and land on an aircraft carrier [the US's X-47B]," said the report.

The report was released ahead of a UN meeting on the use of autonomous weapons in Geneva on April 13.

© Copyright IBTimes 2025. All rights reserved.