Speech-imitating algorithm can steal your voice in 60 seconds

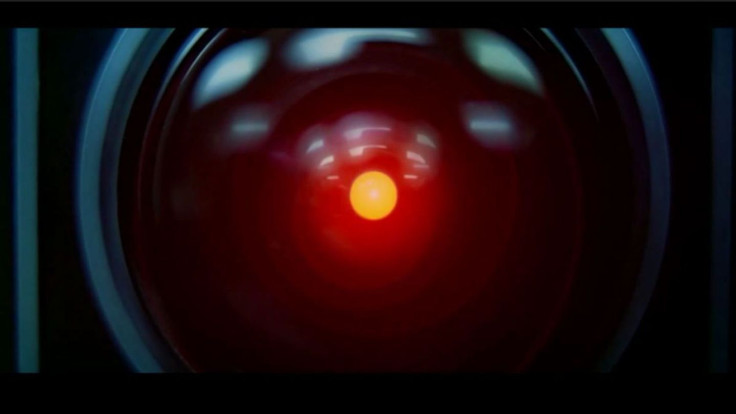

Machine learning algorithm can mimic other people's voices or create new ones from scratch.

A Canadian start-up has developed a voice imitation programme capable of mimicking a person's voice after just a minute of listening to them speak.

Developed by AI firm Lyrebird, the algorithm uses machine learning to synthesise speech based on audio samples and is even able to replicate emotion.

Lyrebird's algorithm is capable of generating new voices from scratch as well as replicating those of others. After hearing an audio clip, the programme determines the defining feature or "key" to the person's voice and then uses this to generate words from scratch. It even varies the intonations it applies so that a repeated sentence doesn't sound the same way twice.

To demonstrate its capabilities, the company replicated the voices of Barack Obama, Donald Trump and Hillary Clinton and uploaded the audio clips to its website.

The results are impressive, although some of the replications are undoubtedly more convincing than others. It's important to note that Lyrebird's tech is still in its beta phase, meaning it's likely to get better as the company perfects the algorithm.

The company hopes that the technology will eventually allow people to craft digital assistants based on their own likenesses, as well as offer new speech synthesis solutions for people with disabilities.

Speech synthesis and recognition technologies have come a long way in the past few years, aided by a burgeoning digital assistant marketplace dominated by the likes of Amazon Alexa, Google Assistant and Siri.

Voice recognition has come so far that some banks are now using it as an alternative to passwords, claiming that the technology is not just quicker but more secure than traditional log-in methods.

'Dangerous consequences'

Yet recent advancements in the field have thrown these claims into question. In November, Adobe demonstrated a piece of software that could record human speech and then rearrange words to make the speaker say essentially anything the user wanted, indicating that our voices aren't quite as forge-proof as we might have hoped.

Lyrebird is clearly aware of these concerns, going so far as to admit that the technology could have "dangerous consequences" should it be misused for nefarious purposes, namely identity theft. It also points out that as speech synthesis technology advances, audio recordings could become less credible as pieces of evidence in court proceedings.

Unfortunately, the start-up doesn't offer much in the way of a solution to this hypothetical problem, instead suggesting that the more people who have access to its API, the less risk there is of it being used for fraud.

"By releasing our technology publicly and making it available to anyone, we want to ensure that there will be no such risks," the company said. "We hope that everyone will soon be aware that such technology exists and that copying the voice of someone else is possible."

© Copyright IBTimes 2025. All rights reserved.