What are AI neural networks and how are they applied to financial markets?

People have been using artificial neural networks to try and predict financial markets for decades. In the new millennium, the algorithms that train the networks to do such things have improved, but not fundamentally changed: what has changed is the raw computing power driving them, which has increased by half a dozen orders of magnitude in the last 30 years.

So now we are experiencing another AI summer. Events like Google buying the machine learning company DeepMind for $600m have made big headlines, as have rumours of secretive hedge funds forming machine learning divisions to do quantitative modelling of markets.

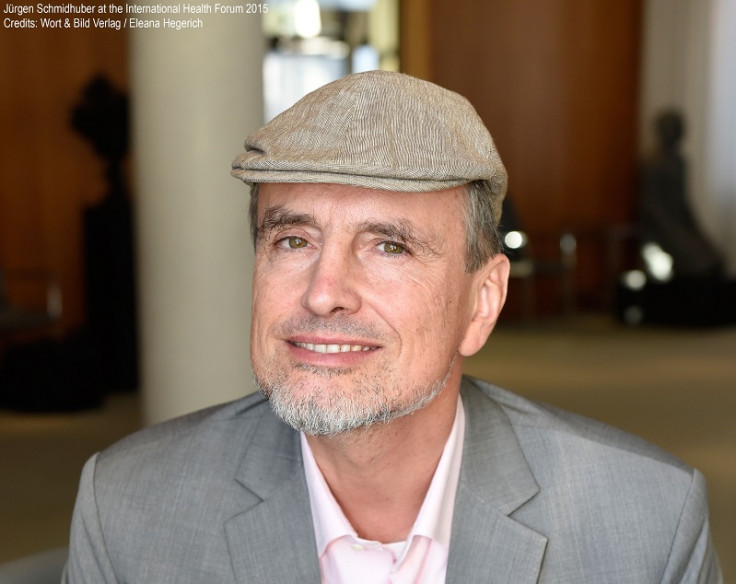

"It's a rather old hat," says Professor Jürgen Schmidhuber, pioneer of widely used methods for deep learning in neural networks which his research group has published since 1991. His team's algorithms are now driving the speech recognition in Android phones, translation from one language to another, or image understanding.

He added: "Although some hedge funds don't know too much about AI, they act as if they did, hinting at amazing secret algorithms they cannot talk about without risking informing the competition."

Schmidhuber said people have used neural networks to predict stock markets with varying degrees of success. The difference today is that computers are one million times better than 25 or 30 years ago and you can train networks that are a million times bigger than those used back then. Asked if his award-winning technology is a good fit for finance, Schmidhuber said: "to some extent, although quite a few modifications are required."

Jürgen Schmidhuber is a Professor at the University of Lugano. Since the age of 15 his main goal has been to build an Artificial Intelligence smarter than himself, then retire.

The Deep Learning Artificial Neural Networks developed since 1991 by his research groups at the Swiss AI Lab IDSIA (USI & SUPSI) and TU Munich have revolutionised handwriting recognition, speech recognition, machine translation, image captioning, and are now available to over a billion users through Google, Baidu, Microsoft, IBM, and many other companies (DeepMind also was heavily influenced by his lab).

His team's Deep Learners were the first to win object detection and image segmentation contests, and achieved the world's first superhuman visual classification results, winning nine international competitions in machine learning and pattern recognition.

His research group also established the field of mathematically rigorous universal AI and optimal universal problem solvers. His formal theory of fun & creativity & curiosity explains art, science, music, and humour.

He has published 333 papers, earned seven best paper/best video awards, the 2013 Helmholtz Award of the International Neural Networks Society, and the 2016 IEEE Neural Networks Pioneer Award. He is president of NNAISENSE, which aims at building the first practical general purpose AI.

Noisy data

With stocks and shares, you have a lot of noise in the data, and the ratio between noise and signal is very high. Some hedge funds are hiring physicists or astronomers with a track record of making sense of tiny signals among noisy data points. For decades machine learning has been an ingredient in these models to improve the predictability of certain events. This can help to increase the expected value of your investment. On the other hand, you can't make sure that it's not going to go down because there are unpredictable events including crashes which sometimes seem to come out of nowhere.

Schmidhuber said that variants of networks with millions of connections that learn to recognise or predict videos can also be applied to financial data. "Streams of real numbers come in, representing time series such as stock chart data. One can try to use that to predict tomorrow's stock market.

"But one can also use all kinds of additional data, such as newspaper articles or even facial expressions of traders, which your system might learn to translate into better predictions of stock market prices in the next second or hour or day or whatever.

"All of that is just a mapping from sequences of data points to other sequences of data points and you can learn such a mapping through our deep (usually recurrent) neural networks and then over time you can test your system based on unseen data, because nobody cares if you are performing well on the past data; the only thing that counts is how well you perform on the unseen data."
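The point about unseen data can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the series is synthetic, and the "model" is a trivial stand-in (the average one-step change), not one of the recurrent networks Schmidhuber describes. What the sketch shows is only the evaluation discipline: split the series chronologically, fit on the earlier part, and judge the result on the later part.

```python
import random

def make_series(n, seed=0):
    """Synthetic noisy series: a slow drift plus Gaussian noise (illustrative only)."""
    rng = random.Random(seed)
    return [0.01 * t + rng.gauss(0.0, 1.0) for t in range(n)]

def chronological_split(series, train_fraction=0.8):
    """Split without shuffling, so the test data lies strictly after the training data."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]

def fit_mean_change(train):
    """Toy stand-in model: the average one-step change seen in the training data."""
    changes = [b - a for a, b in zip(train, train[1:])]
    return sum(changes) / len(changes)

def mean_squared_error(series, step):
    """Error of predicting each next value as the current value plus `step`."""
    errors = [(a + step - b) ** 2 for a, b in zip(series, series[1:])]
    return sum(errors) / len(errors)

series = make_series(500)
train, test = chronological_split(series)
step = fit_mean_change(train)

in_sample = mean_squared_error(train, step)      # performance on past data
out_of_sample = mean_squared_error(test, step)   # the number that actually counts
print(f"in-sample MSE: {in_sample:.3f}, out-of-sample MSE: {out_of_sample:.3f}")
```

The split is deliberately not shuffled: with time series, mixing future points into the training set would let the model peek at data it is later tested on.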

A deep learning model must become adept at ignoring "noise" and irrelevant information within data patterns. "The network has to learn from the data which of these many events are noise or non-informative stuff to be ignored. The network adjusts its connections to get rid of the noise and to focus on important things; on the signal as opposed to the noise. In principle, the network can learn that by itself."

Animal-like AI

A human brain has at least 10 billion neurons in the neocortex, where the interesting computations take place, and each of them is connected to about 10,000 others. Artificial networks are still much smaller than that, but they are getting there.

"Your brain has over 100,000 billion connections. Our largest artificial networks at the moment have only maybe a billion or so. But every ten years computers are getting faster by a factor of 100 per British pound, which means that in 30 years you gain a factor of a million," said Schmidhuber.
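The figures quoted above can be checked with a few lines of arithmetic. The last step is an assumption added for illustration, not a claim from the interview: it supposes that affordable network size grows in direct proportion to computing power per pound.

```python
import math

# Figures quoted in the article
neurons = 10_000_000_000            # at least 10 billion neurons in the neocortex
connections_per_neuron = 10_000
brain_connections = neurons * connections_per_neuron   # 10**14, i.e. 100,000 billion

speedup_per_decade = 100            # "a factor of 100 per British pound" every ten years
speedup_30_years = speedup_per_decade ** 3              # 10**6, a factor of a million

largest_network = 1_000_000_000     # "maybe a billion or so" connections
gap = brain_connections // largest_network              # factor of 100,000

# Assumption: network size scales one-to-one with compute per pound.
decades_to_close_gap = math.log10(gap) / math.log10(speedup_per_decade)

print(brain_connections, speedup_30_years, gap, decades_to_close_gap)
```

Under that assumption the gap of 100,000 would close in about two and a half decades, which is in line with the "few decades" timescale mentioned in the interview.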

Schmidhuber believes that within only a few decades we will have something like human-level artificial intelligence. His next goal is animal-like AI; something akin to a capuchin monkey that can abstractly reason about the world, look ahead and plan in a way that our machines cannot yet do well. He thinks that the step from animal-level AI to human-level AI will not be huge because it took evolution much longer to go from zero to a monkey than from a monkey to a human.

"Everything is going to change in a way that is hard to predict. In not so many years we will have cheap computers with the raw computational power of a human brain. A few decades later, a cheap computer may compute as much as all human brains combined – and everything is going to change; every aspect of civilisation is going to be affected and transformed by that.

"However, one man like myself trying to predict the future of civilisation and its machines, that's a bit like a single neuron in my brain trying to predict what my entire brain is going to do."

Recurrent Neural Networks are the Deepest

Today's most powerful networks are deep, with many subsequent computational stages. Recurrent networks with feedback connections are the deepest of them all, with theoretically unlimited depth.

The most widely used method of this kind is called "Long Short-Term Memory," a deep learning method first published by Hochreiter and Schmidhuber in 1995, and further developed since then in Switzerland and Munich.

Think of a black and white video, a sequence made of many images, each containing one million pixels. Each pixel is white or black or something in between, and encoded by a number. So for example, 1.0 would mean totally white and 0.0 would mean totally black, and a value of 0.3 means grey. A million numbers encode the content of a single image.
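As a small illustration of this encoding, the sketch below turns a tiny 2x2 "image" into numbers between 0.0 and 1.0. The use of 8-bit grey levels (0 to 255) as the raw format and the helper name `to_unit_interval` are assumptions for the example; the article only specifies the 0.0 to 1.0 scale.

```python
def to_unit_interval(grey_levels, max_level=255):
    """Map raw grey levels (assumed 0..max_level) to the range 0.0 (black) to 1.0 (white)."""
    return [[level / max_level for level in row] for row in grey_levels]

# A hypothetical 2x2 frame: black, white, dark grey, mid grey
frame = [[0, 255],
         [76, 128]]

encoded = to_unit_interval(frame)
flat = [value for row in encoded for value in row]  # one number per pixel

# A 1000 x 1000 frame would be flattened to a million numbers in the same way.
pixels_per_image = 1000 * 1000
print(flat, pixels_per_image)
```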

The recurrent neural network is made of simple processors called neurons, each producing a sequence of real-valued activations. Input neurons get activated through pixels of incoming images, other neurons through connections with real-valued weights from previously active neurons.

At a given time, each non-input neuron typically computes its activation as a weighted sum of its incoming activations and squeezes the result down to the interval between zero and one through a non-linear squashing function. The recurrent connections allow for short-term memories of previous observations.
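The update described above can be sketched in a few lines. This is a plain recurrent layer, not the Long Short-Term Memory architecture; the logistic sigmoid as squashing function, the weight values and the function names are all assumptions chosen for illustration.

```python
import math

def squash(x):
    """Logistic sigmoid: squeezes any real number into the interval between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def recurrent_step(inputs, previous, w_in, w_rec):
    """Compute the new activations of the non-input neurons for one time step.

    w_in[j][i]  : weight from input neuron i to neuron j
    w_rec[j][k] : weight from neuron k's previous activation to neuron j
    """
    new = []
    for j in range(len(previous)):
        total = sum(w * x for w, x in zip(w_in[j], inputs))
        total += sum(w * h for w, h in zip(w_rec[j], previous))
        new.append(squash(total))
    return new

def run(sequence, w_in, w_rec):
    """Feed a whole input sequence through the network, starting from zero activations."""
    state = [0.0] * len(w_rec)
    for inputs in sequence:
        state = recurrent_step(inputs, state, w_in, w_rec)
    return state

# Hypothetical weights: two input neurons, two recurrent neurons
w_in = [[0.5, -0.3], [0.8, 0.2]]
w_rec = [[0.1, 0.4], [-0.6, 0.3]]

# Both sequences end with the same input, but differ in what came before.
after_signal = run([[1.0, 0.0], [0.0, 0.0]], w_in, w_rec)
after_nothing = run([[0.0, 0.0], [0.0, 0.0]], w_in, w_rec)
print(after_signal, after_nothing)
```

The two final states differ even though the last input is identical, because the recurrent weights carry a trace of the earlier input forward. That trace is the short-term memory the text refers to.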

Imagine an output neuron whose activation at the end of the video should be 1.0 if the video contained, say, a flying bird, and zero otherwise. In the beginning the network is randomly pre-wired. It knows nothing about birds, just producing stupid results as a reaction to the input stream. However, through learning it decreases the difference between what it should have said and what it really said. The many connections are adjusted such that the network becomes better at recognising flying birds and distinguishing them from flying bats and sitting birds, etc.
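The learning step can be sketched in a heavily simplified form: a single output neuron trained by gradient descent on the squared difference between what it should have said and what it really said. This is not the recurrent training procedure used in practice. The two hand-made input features ("bird-like shape" and "in flight") are hypothetical stand-ins for what a real network would have to extract from raw pixels, and the learning rate and epoch count are arbitrary choices.

```python
import math
import random

def squash(x):
    """Logistic sigmoid squashing function."""
    return 1.0 / (1.0 + math.exp(-x))

def output(features, weights, bias):
    """Activation of the single output neuron."""
    return squash(sum(w * x for w, x in zip(weights, features)) + bias)

def total_error(examples, weights, bias):
    """Sum of squared differences between target and actual output."""
    return sum(0.5 * (output(f, weights, bias) - t) ** 2 for f, t in examples)

def train(examples, weights, bias, epochs=2000, rate=0.5):
    """Adjust the connections a little after every example to shrink the error."""
    for _ in range(epochs):
        for features, target in examples:
            out = output(features, weights, bias)
            # Gradient of 0.5 * (out - target)**2 with respect to the weighted sum
            delta = (out - target) * out * (1.0 - out)
            weights = [w - rate * delta * x for w, x in zip(weights, features)]
            bias -= rate * delta
    return weights, bias

# Hypothetical features: (bird-like shape, in flight) -> target 1.0 only for a flying bird
examples = [
    ([1.0, 1.0], 1.0),  # flying bird
    ([1.0, 0.0], 0.0),  # sitting bird
    ([0.0, 1.0], 0.0),  # flying bat
    ([0.0, 0.0], 0.0),  # neither
]

rng = random.Random(0)
weights = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # random pre-wiring
bias = rng.uniform(-0.5, 0.5)

error_before = total_error(examples, weights, bias)
weights, bias = train(examples, weights, bias)
error_after = total_error(examples, weights, bias)
print(f"error before: {error_before:.3f}, after: {error_after:.3f}")
```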

© Copyright IBTimes 2025. All rights reserved.