Facebook bot problem: Users forced to upload selfies to prove they are real

Like rival social networking websites, Facebook has a major bot problem.

Facebook, the world's largest social network, has confirmed that it is attempting to "catch suspicious activity" by making users upload selfies to help prove they are real humans.

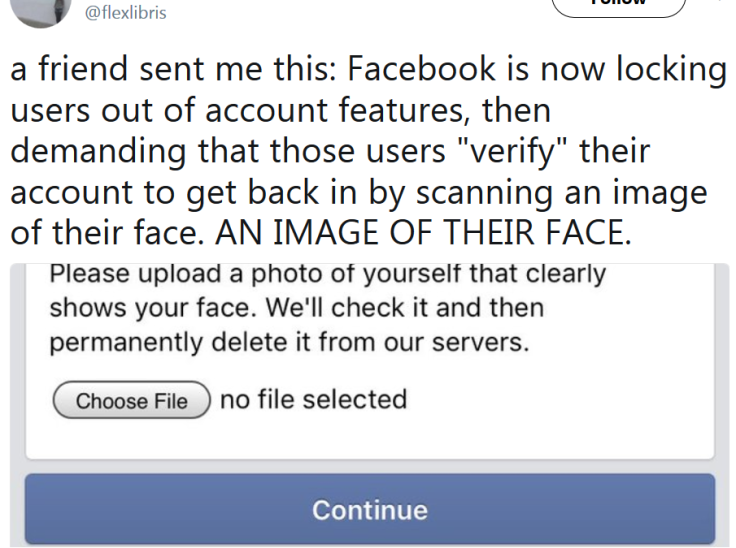

A screenshot widely shared on Twitter suggested the platform was now using a new checkpoint system in place of the traditional "captcha" verification process.

"Facebook is now locking users out of account features, then demanding that those users 'verify' their account to get back in by scanning an image of their face," the tweet which highlighted the issue read.

It showed a notice claiming the website would check images and then "permanently delete" them.

As speculation mounted, Facebook confirmed to Wired that the system was real, explaining that it was designed as an automated way of checking whether an account is legitimate.

A spokesperson said it was created to "catch suspicious activity at various points of interaction on the site, including creating an account, sending friend requests [and] setting up ads payments."

Like rival social networks, Facebook has a significant bot problem.

It was accused this year of playing a key role in the Russia-led misinformation and propaganda campaign during the 2016 US presidential election by selling targeted advertising to troll farms.

While it remains unclear how long the selfie-style verification system has been in place, numerous threads and complaints about it appeared on Reddit as early as April.

One frustrated post read: "I started by uploading pictures that had already been taken, then took a couple of myself. Every time it says the picture is invalid."

The top comment read: "I just discovered the same problem. I don't even post anything to Facebook, including pictures, so I don't know how my picture would help. I only use it because you have to have a Facebook account to create accounts on certain websites or comment on certain sites.

"Since it wouldn't serve any practical purpose, and I don't trust Facebook with my picture at all, I'm not going to post one, but still, I would like my account back."

Over the past month, Facebook has touted a number of ways that its machine learning and artificial intelligence (AI) technology is helping to protect users from fake or abusive accounts.

In one instance, it claimed users could help cut down on revenge porn by uploading their nude pictures to the platform. More recently, it said AI was being introduced to bolster the "proactive detection" of suicidal users by recognising updates that may be cause for concern.

On Wednesday (29 November), it said AI was becoming more effective at helping to detect terrorist content. Facebook claimed that 99% of such material is now first found by automation.