AI neural network succeeds in identifying faces - even from censored or blurred photos

Computer scientists have programmed a neural network to recover hidden information from censored images.

Computer scientists in the US have succeeded in training an artificial neural network to recognise faces in photographs, even if the images have been deliberately censored or blurred to preserve the subject's privacy.

Researchers from the University of Texas at Austin and Cornell University have developed computer algorithms that make it possible to train neural networks to identify faces, recognise objects and read handwritten numbers, even when the images have been protected by image obfuscation software.

Their research, entitled "Defeating Image Obfuscation with Deep Learning", is published on arXiv, Cornell University Library's open-access repository.

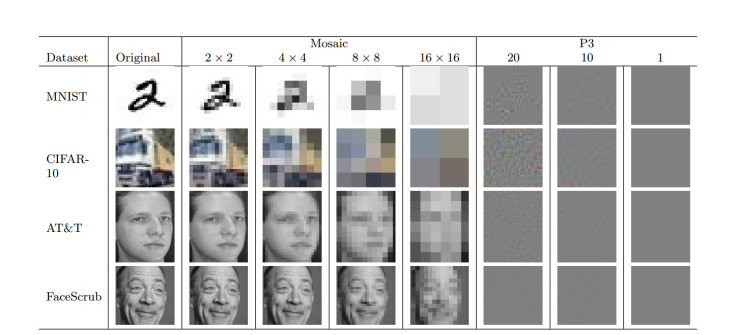

At the moment, the top image privacy protection technologies include blurring (used by YouTube), mosaicing (pixellation) and Privacy Preserving Photo Sharing (P3), a tool that encrypts part of a JPEG photo file in order to keep it secure.

Neural networks are computer models made up of layers of interconnected artificial "neurons", loosely inspired by the human nervous system. They are trained using computer algorithms to solve complex problems, whereby different layers examine different parts of the problem and combine to produce an answer.

The researchers trained the neural networks on four different datasets of images, using both images that were clearly visible and images that had privacy protection technologies applied to them. One of the datasets consisted of videos featuring facial images of 530 celebrities that the researchers uploaded to YouTube, in which each face was shown for 1 second, followed by a white frame, before another face was displayed.

The neural network was much better at identifying faces

With one of the hardest datasets, the researchers found that while humans could only identify a face with an accuracy of 0.19%, the neural network was able to identify the faces with an accuracy of 71.53%. In another dataset, humans achieved an accuracy of 2.5%, while the computer achieved an accuracy of 96.25%.

Of course, how well the neural networks could identify features in the images depended on the strength of the privacy protection technology used to hide them. An image protected with 2 x 2 pixel mosaicing, where the squares are much smaller, produced more accurate results, while images protected using 16 x 16 pixel mosaicing achieved much lower accuracy scores.
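To give a rough sense of why the size of the mosaic squares matters, here is a minimal Python sketch of mosaicing in general. It is not the researchers' code: the function names `mosaic` and `mean_abs_error` and the synthetic 16 x 16 test image are assumptions made purely for illustration. Each square of pixels is replaced by its average value, so larger squares throw away more of the original detail.

```python
def mosaic(image, block):
    """Pixellate a greyscale image (a list of rows of numbers).

    Every block x block square is replaced by the average of its pixels.
    Hypothetical helper for illustration, not taken from the paper.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            mean = sum(image[r][c] for r in rows for c in cols) / (len(rows) * len(cols))
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


def mean_abs_error(a, b):
    """Average absolute per-pixel difference between two same-sized images."""
    total = sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    return total / (len(a) * len(a[0]))


# Synthetic 16 x 16 gradient image (an assumption, standing in for a photo).
image = [[r * 16 + c for c in range(16)] for r in range(16)]

fine = mosaic(image, 2)     # 2 x 2 squares: most of the structure survives
coarse = mosaic(image, 16)  # 16 x 16 squares: the whole image becomes one value

print("2 x 2 error:  ", mean_abs_error(image, fine))
print("16 x 16 error:", mean_abs_error(image, coarse))
```

The per-pixel error here is only a stand-in for how much information is lost. It is not the recognition accuracy reported in the paper, which depends on the trained neural network and the dataset.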

"The experiments in this paper demonstrate a fundamental problem faced by ad hoc image obfuscation techniques. These techniques partially remove sensitive information so as to render certain parts of the image (such as faces and objects) unrecognisable by humans, but retain the image's basic structure and appearance and allow conventional storage, compression, and processing," the researchers concluded in their paper.

"Unfortunately, we show that obfuscated images contain enough information correlated with the obfuscated content to enable accurate reconstruction of the latter. Modern image recognition methods based on deep learning are especially powerful in this setting because the adversary does not need to specify the relevant features of obfuscated images in advance or even understand how exactly the remaining information is correlated with the hidden information."

The researchers are warning designers of privacy protection technologies to make sure they measure how much information can be reconstructed or inferred from censored images using state-of-the-art image recognition algorithms, in order to avoid a situation whereby images have to be entirely encrypted to ensure a user's online privacy.

© Copyright IBTimes 2025. All rights reserved.