Google using machine learning to shrink large images so they load faster and take up less space

Google is using artificial intelligence to serve images on Google+ that are small in file size yet high in quality.

Google has developed a new technology that uses artificial intelligence to shrink large images so they load on webpages faster, reducing the burden on the Google+ social network by 30%.

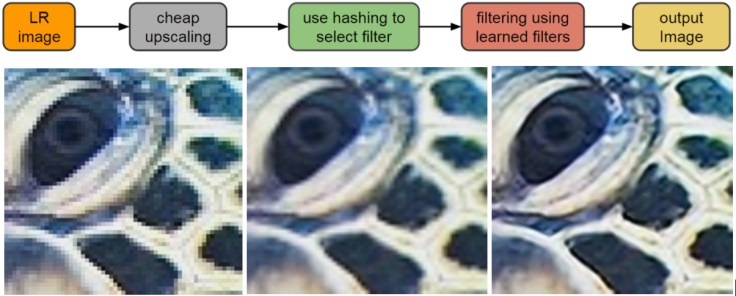

The technology, known as Rapid and Accurate Image Super-Resolution (RAISR), uses machine learning, a branch of computer science, to train computers to estimate what a low-resolution image would look like at high resolution and to produce that result, even though the actual image file is low resolution.

Over the past decade, the quality of cameras in smartphones has improved significantly, to the extent that many of these devices are now almost comparable to consumer point-and-shoot digital cameras.

This presents a problem: if everyone uploads high-resolution images to social media all day long, those images take up a huge amount of space on the server. Each time a user requests one of these images on their device or computer, some of the server's bandwidth is used up. The bigger the picture, the more bandwidth it consumes, which is one reason websites sometimes load slowly.

So what can you do? Facebook and Twitter both have processes that automatically compress images as they are uploaded in order to get the files down to a size that the server can manage, but Google's researchers wanted to do better than that.

Teaching computers to predict a better quality image

Rather than simply compressing uploads, they decided to develop algorithms that enable computers to sharpen an image and enhance its contrast. Low-resolution images are a big problem if you want to zoom in on photos and images of text on a device, and it is difficult to convert low-resolution images and videos so that they display properly on HD screens.

To do this, the researchers created a database of 10,000 pairs of images, in which one image in each pair is high resolution and the other is a low-resolution copy. They then trained the AI software on all the pairs to apply filters, using two different methods, so that the computer could produce a new image whose detail is of comparable quality to the original high-resolution image.
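
The article does not say how the filters are fitted to the image pairs. As a rough illustration only, the sketch below assumes a plain least-squares fit: given flattened low-resolution patches and the matching high-resolution pixel for each, it finds the filter weights that best map one to the other. The function names `solve` and `learn_filter` are hypothetical and are not taken from Google's code.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot to keep the division stable.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def learn_filter(patches, targets):
    """Assumed training step: least-squares filter h minimising
    sum((patch . h - target)^2) over all patch/target pairs."""
    n = len(patches[0])
    # Normal equations: (A^T A) h = A^T b
    ata = [[sum(p[i] * p[j] for p in patches) for j in range(n)]
           for i in range(n)]
    atb = [sum(p[i] * t for p, t in zip(patches, targets)) for i in range(n)]
    return solve(ata, atb)
```

In this sketch each entry of `patches` would be a low-resolution neighbourhood flattened into a list, and each entry of `targets` the corresponding pixel from the high-resolution image of the pair.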

The algorithms train the software to look at and detect features found in small patches of pixels in an image, such as brightness/colour gradients or flat/textured regions, by looking for the angle of an edge (the direction the line or feature is going in); how sharp the edge is (strength); and how directional the edge is (coherence).
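
To make the three edge features more concrete, here is a minimal sketch of one standard way to measure them, using the gradients inside a small patch. The formulas and the function name `patch_features` are assumptions chosen for illustration; the article does not spell out the exact calculation RAISR uses.

```python
import math


def patch_features(patch):
    """Return (angle, strength, coherence) for a 2-D list of grey levels.

    Sketch under assumptions: the features come from the eigenvalues of the
    summed gradient products (a "structure tensor") over the patch interior.
    """
    h, w = len(patch), len(patch[0])
    gxx = gyy = gxy = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central differences approximate the horizontal/vertical gradient.
            gx = (patch[y][x + 1] - patch[y][x - 1]) / 2.0
            gy = (patch[y + 1][x] - patch[y - 1][x]) / 2.0
            gxx += gx * gx
            gyy += gy * gy
            gxy += gx * gy
    trace = gxx + gyy
    diff = math.sqrt((gxx - gyy) ** 2 + 4.0 * gxy * gxy)
    lam1 = (trace + diff) / 2.0             # larger eigenvalue
    lam2 = max((trace - diff) / 2.0, 0.0)   # smaller one, clamped against rounding
    # Dominant gradient direction in [0, pi); the edge itself runs
    # perpendicular to this direction.
    angle = (0.5 * math.atan2(2.0 * gxy, gxx - gyy)) % math.pi
    s1, s2 = math.sqrt(lam1), math.sqrt(lam2)
    strength = s1                            # how sharp the edge is
    coherence = (s1 - s2) / (s1 + s2) if s1 + s2 > 0 else 0.0
    return angle, strength, coherence
```

A patch containing one clean, straight edge gives a coherence close to 1, while a flat or noisy patch gives a value close to 0.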

"In practice, at run-time RAISR selects and applies the most relevant filter from the list of learned filters to each pixel neighborhood in the low-resolution image. When these filters are applied to the lower quality image, they recreate details that are of comparable quality to the original high resolution, and offer a significant improvement to linear, bicubic, or Lanczos interpolation methods," the researchers wrote in a Google Research blog post about the research in November 2016.

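The run-time step described in the quote, picking a learned filter for each pixel neighbourhood and applying it, can be sketched as follows. This is a simplified illustration, not Google's implementation: it buckets each neighbourhood by gradient angle only (ignoring strength and coherence), assumes the input has already been brought to the target size, and uses hypothetical names (`ANGLE_BINS`, `bucket`, `apply_filter`, `enhance`) and a placeholder filter bank.

```python
import math

ANGLE_BINS = 4  # hypothetical number of angle buckets


def bucket(patch):
    """Quantise the dominant gradient angle of a patch into a bucket index."""
    gxx = gyy = gxy = 0.0
    for y in range(1, len(patch) - 1):
        for x in range(1, len(patch[0]) - 1):
            gx = (patch[y][x + 1] - patch[y][x - 1]) / 2.0
            gy = (patch[y + 1][x] - patch[y - 1][x]) / 2.0
            gxx += gx * gx
            gyy += gy * gy
            gxy += gx * gy
    angle = (0.5 * math.atan2(2.0 * gxy, gxx - gyy)) % math.pi
    return min(int(angle / math.pi * ANGLE_BINS), ANGLE_BINS - 1)


def apply_filter(patch, kernel):
    """Weighted sum of the patch with a kernel of the same shape."""
    return sum(p * k
               for prow, krow in zip(patch, kernel)
               for p, k in zip(prow, krow))


def enhance(image, bank, size=5):
    """For each interior pixel, look up the filter for its neighbourhood's
    bucket and replace the pixel with the filtered value."""
    r = size // 2
    out = [row[:] for row in image]  # border pixels are left unchanged
    for y in range(r, len(image) - r):
        for x in range(r, len(image[0]) - r):
            patch = [row[x - r:x + r + 1] for row in image[y - r:y + r + 1]]
            out[y][x] = apply_filter(patch, bank[bucket(patch)])
    return out


# Placeholder bank: every bucket holds an identity kernel, so enhance()
# returns the image unchanged. Learned filters would go here instead.
identity = [[0.0] * 5 for _ in range(5)]
identity[2][2] = 1.0
bank = [identity] * ANGLE_BINS
```

Because the lookup is a simple table access followed by one small weighted sum per pixel, this style of method stays cheap at run-time, which fits the "rapid" part of the name.
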
Google plans to roll out the function on Google+ and some Android devices this week, according to a new blog post by Google+ product manager John Nack. The internet giant says that RAISR has succeeded in displaying large images so that they use up 75% less bandwidth than before, and that the technology is already being applied to over 1 billion images per week.

When a user wants to see a high resolution image on Google+, their device requests only a fraction of the pixels from the image stored on Google's servers. RAISR is then used to produce an image that is comparable in quality to the original image, but much lighter on bandwidth.
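
As a back-of-the-envelope illustration of why requesting fewer pixels saves so much, the sketch below assumes the device asks for the image at half its width and half its height, and that the data transferred scales roughly with the pixel count. Both are assumptions; the article does not state the exact fraction requested, and real savings depend on how the image is compressed. The function name `pixel_savings` is hypothetical.

```python
def pixel_savings(width, height, scale):
    """Fraction of pixels saved when an image is requested at 1/scale of
    its width and 1/scale of its height (assumed download strategy)."""
    full = width * height
    requested = (width // scale) * (height // scale)
    return 1 - requested / full


# A 2048 x 1536 photo requested at half size in each dimension
# needs only a quarter of the pixels.
saving = pixel_savings(2048, 1536, 2)
```

Under these assumptions `saving` comes out at 0.75, which is in line with the 75% bandwidth figure Google quotes.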

Google's research paper, entitled "RAISR: Rapid and Accurate Image Super Resolution", is slated to be published in the journal IEEE Transactions on Computational Imaging in the near future. For now, a draft of the paper can be read on arXiv, the open-access repository run by Cornell University Library.

© Copyright IBTimes 2025. All rights reserved.