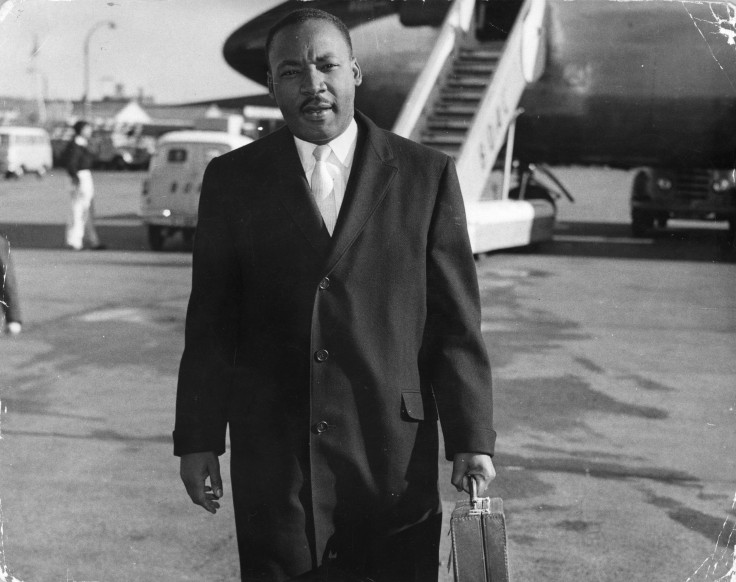

After Martin Luther King Jr Deepfakes, Experts Ask: Who's Safe from AI Resurrection?

When OpenAI stopped users from generating videos of Martin Luther King Jr on its Sora platform, the move was meant as an act of respect, shielding the civil rights icon's image from misuse.

But for many ethicists, the decision opened a more troubling question: in the age of artificial intelligence, who deserves protection from synthetic resurrection, and who is left to the mercy of the algorithm?

The controversy began after the King estate condemned a wave of deepfake videos that distorted the leader's image and words, including altered versions of his famous 'I Have a Dream' speech.

Some clips even fabricated scenes showing Dr King in conflict with Malcolm X, prompting his daughter, Bernice A. King, to call them 'disrespectful' and urge platforms to remove them.

OpenAI quickly announced it would 'pause' the use of Dr King's likeness while it strengthened 'guardrails for historical figures'.

The company said it recognised 'strong free speech interests' in depicting public figures, but added that families and estates should ultimately decide how their images are used.

The move was praised for its sensitivity, yet it also drew criticism for its selectivity. By shielding Dr King but not others, such as John F. Kennedy or Stephen Hawking, OpenAI drew a line that many see as ethically inconsistent.

Digital Dignity or Digital Divide?

Generative AI expert Henry Ajder welcomed the company's decision but warned that it exposed deeper inequities.

'King's estate rightfully raised this with OpenAI, but many deceased individuals don't have well-known or well-resourced estates to represent them,' he told the BBC.

Ajder cautioned against a future where only the famous are granted digital dignity, while everyone else becomes fair game for online manipulation.

His warning captures what many ethicists now fear: that AI's moral boundaries are being shaped by privilege, visibility and wealth.

OpenAI has acted when public figures or their families intervened, as Bernice King and Zelda Williams, daughter of the late comedian Robin Williams, did. Ordinary people and lesser-known historical figures, however, remain largely unprotected.

I concur concerning my father. Please stop. #RobinWilliams #MLK #AI https://t.co/SImVIP30iN

— Be A King (@BerniceKing) October 7, 2025

AI, Law and the Battle Over Memory

Artificial intelligence is now both a shield and a threat. It can filter harmful content and detect deepfakes before they spread, but it also enables anyone to create hyper-realistic falsehoods at unprecedented speed.

Lawmakers are struggling to keep up. The EU's AI Act introduces new rules requiring synthetic media to be clearly labelled, but ethicists argue the real danger lies beyond regulation, in AI's power to rewrite cultural memory itself.

AI ethicist Olivia Gambelin has called deepfakes 'a dangerous rewriting of history', warning that digital reincarnations risk distorting truth and legacy alike.

For families, the impact is deeply personal. Zelda Williams described AI-generated recreations of her father as 'disturbing', while Bernice King said using her father's image without consent was 'disrespectful'. Between tribute and exploitation, the emotional line is perilously thin.

Consent Beyond the Grave

OpenAI told the BBC it is in 'direct dialogue with public figures and content owners' to develop clearer controls for Sora, promising multiple layers of protection to prevent misuse.

Yet critics say the right to consent should not depend on fame or family resources. While estates like those of Dr King or Robin Williams can intervene, most people cannot.

Experts including Ajder argue for a uniform global framework that treats digital likeness as part of a person's moral and cultural legacy, not merely creative material.

Without such rules, they warn, the future could divide the dead into two classes: those whose memory is protected, and those whose identities are left for AI to reinvent.

© Copyright IBTimes 2025. All rights reserved.