Trump Moves to Block State AI Laws, Leaving Safety Decisions to Tech Companies

Trump's executive order curbs state-led AI rules, shifting power over safety and transparency towards big tech firms

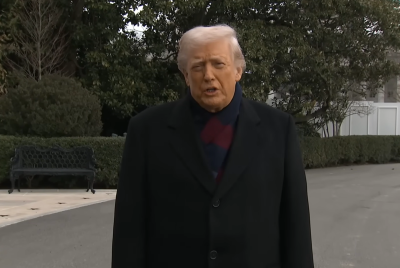

US President Donald Trump has moved to block states from implementing their own artificial intelligence laws. The order comes as states such as New York push ahead with stricter safety and transparency regulations, warning that the absence of federal oversight puts the public at serious risk.

Political experts say the decision intensifies the conflict between federal authorities and states seeking to control AI development and prevent it from spiralling out of control.

State-Based AI Laws Junked: What's Next for AI?

Under the executive order, signed on Dec. 14, 2025, the federal government will oppose state AI regulations deemed 'onerous' or excessively strict.

According to NPR, the White House framed the move as a way to protect innovation and maintain US competitiveness globally. Google CEO Sundar Pichai echoed the sentiment, saying it will help tech companies expand overseas.

Over the past two years, several states have sought to fill the AI policy gap left by Congress. New York's proposed RAISE Act is among the most ambitious, requiring advanced AI developers to publish safety plans, disclose major incidents, and halt releases if their systems fail internal safety tests.

Supporters argue the bill imposes few new obligations, essentially formalising voluntary safety agreements already made public by leading AI firms. The focus is on frontier models that, if released irresponsibly, could cause large-scale harm, rather than ordinary consumer tools.

Trump's executive order does not automatically repeal these laws, but it signals that the Department of Justice may challenge them in court. Legal experts predict a prolonged struggle, as states assert their right to regulate technological risks in the interest of public safety.

Why This Favours Tech Companies More Than Consumers

Global leadership is important for the US, but so is consumer protection. Allowing the federal government to control AI regulations raises significant concerns. The executive order effectively blocks New York's rules covering AI failures, such as data leaks or the generation of harmful content. With fewer mandatory disclosures, oversight will be limited if something goes wrong.

Tech firms, meanwhile, gain the upper hand. Without binding state-level rules, they can navigate compliance requirements and potential penalties more freely. Although some legal obligations remain, these are less strict than state laws, allowing AI development to move faster than regulation.

Critics argue that the cost of preserving US competitiveness against rivals such as China is borne by the American public, who may now be at the mercy of AI companies. They warn that, rather than preventing AI-related dangers, the system will rely on damage control after problems arise.

What Role Tech Companies Now Play in US AI Laws

If state-level regulatory control is weakened, technology companies will effectively hold the reins on AI safety. In practical terms, these firms will decide how to test their models, which risks to disclose, and when a system is safe enough for release. Companies may still use internal review boards, conduct red-teaming exercises, or adopt voluntary reporting frameworks. Some might even follow global standards or industry codes of conduct.

However, none of these measures are legally binding, and their implementation depends entirely on corporate goodwill. Without external oversight, commercial pressures could easily outweigh caution in the development and deployment of AI applications.

© Copyright IBTimes 2025. All rights reserved.