Kendra Hilty, Woman Behind TikTok's 'I Fell in Love with My Psychiatrist' Drama, Goes Viral Again — Here's Why

After heartbreak, Kendra Hilty found comfort in TikTok and an AI chatbot, until the lines between fantasy and reality blurred.

When therapy sessions spill onto TikTok and advice comes not from a licensed professional but from an AI chatbot, the lines between reality and narrative can blur in unsettling ways.

This is precisely the story that has unfolded with Kendra Hilty — an ADHD coach who has captivated millions with an ongoing, 25-part TikTok series documenting her unexpected feelings for her psychiatrist.

Framed as a personal diary, Kendra Hilty's (@kendrahilty) posts walk viewers through her appointments, emotions, and interpretations of the doctor's behaviour.

What began as an intimate glimpse into modern therapy culture has evolved into one of TikTok's most dissected dramas, sparking admiration from some for its 'raw honesty' and concern from others over its intensity.

By structuring each upload like a chapter — complete with cliff-hangers and unanswered questions — Hilty has created a compelling serial format that keeps audiences returning, ensuring her narrative dominates TikTok's mental health conversations.

A Private Drama Played Out Live

Hilty's storytelling doesn't stop with pre-recorded videos. She frequently goes live on TikTok, speaking directly to viewers as the saga develops in real time.

These livestreams have become an unfiltered arena for audience interaction, transforming strangers into participants in her personal life.

During these sessions, she fields probing questions and reacts instantly to both supportive and critical comments. The unscripted nature adds unpredictability, with her tone and mood often shifting according to the live chat.

The effect is a potent feedback loop — the more Hilty shares, the more invested and vocal her audience becomes. It's a volatile cocktail of empathy, judgment, and online performance.

Enter the AI 'Oracle'

Days after Hilty posted the first videos, the saga took an unexpected and even more controversial turn. She revealed she had been speaking to AI chatbots, one named Henry, and another that calls her 'the Oracle'.

She describes Henry as 'working overtime', suggesting she consults the chatbot frequently for insights and interpretations. At times, she has read out AI-generated messages during livestreams, effectively letting the chatbot join her on air.

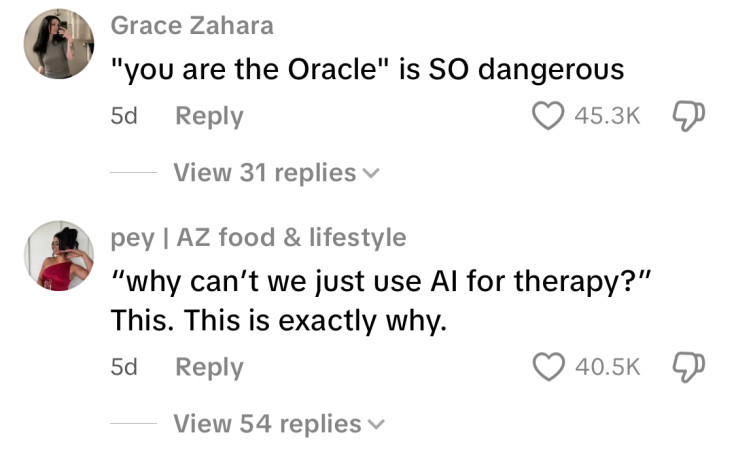

This blending of human-AI interaction with an emotionally charged personal story has shocked some viewers. Many now question whether the AI's responses are reinforcing her perspective rather than offering any form of objective analysis.

Why This Is Raising Red Flags

AI chatbots, such as ChatGPT, can simulate empathy and insight, but they are not human, not licensed, and not bound by any duty of care. They rely on predicting the next likely word, not on assessing truth or applying therapeutic principles.

If you ask a leading question, such as 'Does this mean he's in love with me?', the AI will usually proceed from that assumption. The result can be an echo chamber that validates your framing, even if it is harmful or inaccurate.

Mental health professionals warn that this dynamic is particularly risky for individuals already experiencing emotional distress. An AI cannot intervene in a crisis, recognise urgent warning signs, or set ethical boundaries the way a trained clinician can.

When AI Becomes an Echo Chamber

Prolonged and emotionally intense interactions with AI can create what some researchers call 'AI psychosis', where a chatbot's agreeable responses deepen pre-existing delusions or distortions. The AI is not intentionally misleading; it is simply continuing from the framing the user supplies in the prompt.

For someone caught in a cycle of obsessive thought or heightened emotion, this reinforcement can feel like confirmation from a trusted source. In reality, the AI is only mirroring back the user's language and logic.

Without human oversight, these interactions risk creating a closed feedback loop. Instead of helping a person work through their thoughts, the AI may end up cementing them, whether they are healthy or not.

A Cultural Crossroads — And A Cautionary Tale

Hilty's TikTok saga has evolved into more than just a personal account, becoming a cautionary tale about the intersection of therapy culture, influencer culture, and the growing trend of outsourcing emotional processing to machines.

Her openness and narrative skill are undeniable. Yet the inclusion of chatbots in her deeply personal storyline illustrates how quickly human reality can become entangled with algorithmic output.

As viewers follow this saga, one takeaway stands out: AI can provide conversation, but not care; words, but not wisdom.

And in matters of the heart and mind, knowing the difference can be critical.

© Copyright IBTimes 2025. All rights reserved.